Hi,

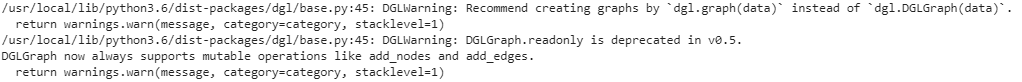

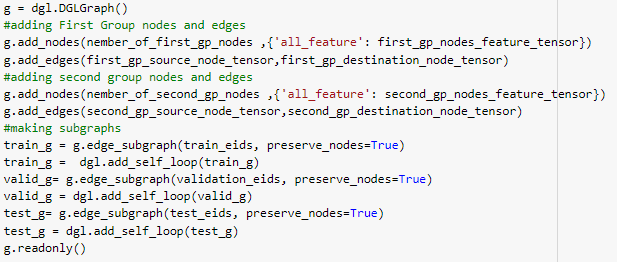

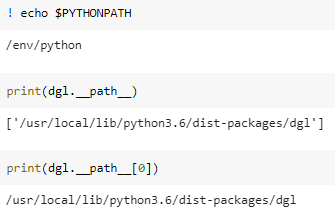

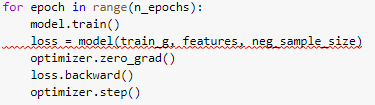

I have been using GATConv layers for link prediction, and my code worked properly until a few hours ago, but now I get the warning and error shown above.

My graph doesn't have self-loops by default, so I added self-loops to the graph (though not using dgl.add_self_loop(g)), but the error persists. Also, the error says "Setting allow_zero_in_degree to be True", but I don't use DotGatConv, so I am not sure where to set this parameter. What should I do now?

Thanks,
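
For reference, here is a minimal plain-Python sketch of the condition the error message seems to be about: nodes with no incoming edges. It is not DGL code, and the edge lists and helper names (`in_degrees`, `zero_in_degree_nodes`, `add_self_loops`) are made up for illustration. It only shows how one might check whether any node still has zero in-degree before and after adding a self-loop to every node.

```python
# Illustrative only: a toy directed graph stored as two parallel lists,
# src[i] -> dst[i]. All names and data here are hypothetical.

def in_degrees(num_nodes, dst):
    """Count incoming edges for every node."""
    deg = [0] * num_nodes
    for v in dst:
        deg[v] += 1
    return deg

def zero_in_degree_nodes(num_nodes, dst):
    """Return the nodes that receive no incoming edge."""
    return [v for v, d in enumerate(in_degrees(num_nodes, dst)) if d == 0]

def add_self_loops(num_nodes, src, dst):
    """Append one self-loop (v -> v) for every node."""
    loops = list(range(num_nodes))
    return src + loops, dst + loops

num_nodes = 4
src = [0, 1, 2]
dst = [1, 2, 1]

# Nodes 0 and 3 have no incoming edges in the toy graph.
before = zero_in_degree_nodes(num_nodes, dst)

# After adding self-loops, every node has at least one incoming edge.
new_src, new_dst = add_self_loops(num_nodes, src, dst)
after = zero_in_degree_nodes(num_nodes, new_dst)

print(before)
print(after)
```

If the same kind of check on the graph that is actually passed to the GATConv layer still reports zero in-degree nodes, the self-loops were probably added to a different graph object than the one used in the forward pass (this is a guess, not something confirmed from the screenshots).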