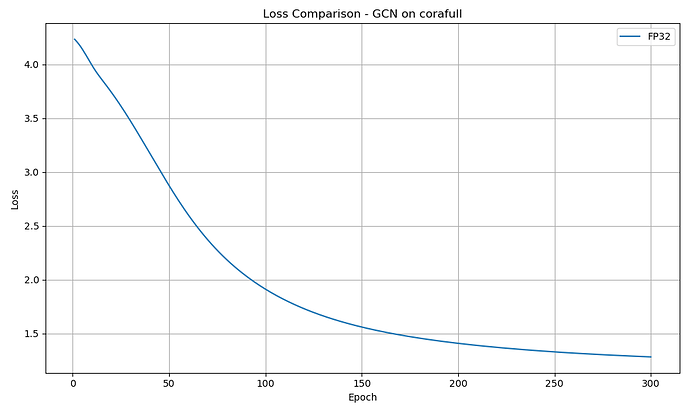

When I train a GCN model and a GAT model using the full graph without any sampling or dropout, and with the Adam optimizer, my loss curve steadily decreases without any fluctuations. This behavior is different from the typical loss curves observed in other neural network training processes. What could be the reason for this? Additionally, I would like to know more about how backpropagation and gradient updates are calculated in GNNs. Are there any resources available that explain this process?

Using the full graph for training without any randomness is analogous to full-batch gradient descent. At every step, you are computing the exact gradient that minimizes the loss across all the samples, so the loss is (almost) guaranteed to decrease given a sufficiently small learning rate, and the curve will naturally become smoother.

This article also explains the different behavior between full-batch gradient descent and minibatch gradient descent. An Introduction to Gradient Descent | by Yang | Towards Data Science

Thank you!

And i have another question. I use bf16 to train GCN and GAT model. In many dataset, model accuracy is same to using fp32 to train which include the large-scale graph “obgn-products”. Is this correct? So bf16 can usually be used in most GNN and not have loss on the accuracy? Could you tell me your experience.

This topic was automatically closed 30 days after the last reply. New replies are no longer allowed.