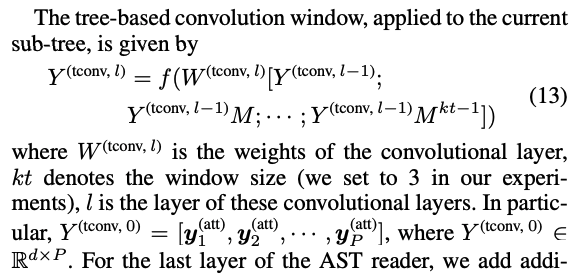

I was reading the TreeGen paper (https://arxiv.org/pdf/1911.09983.pdf) and want to implement its tree convolution layer, shown in the image above.

Can this be done with PyTorch Geometric, DGL, or a similar library? Or is the best way simply to build the tree's adjacency matrix, multiply my node vectors by it, and then apply a normal convolution?
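To make the second option concrete, here is a rough sketch of what I have in mind in plain PyTorch. It rests on my own reading of the layer: each node's features are concatenated with those of its parent, grandparent, and so on (successive powers of a parent adjacency matrix), followed by one shared linear map and a ReLU. The names `parent_adjacency` and `TreeConv`, the root self-loop, and the choice of ReLU are my assumptions, not something taken from the paper.

```python
import torch
import torch.nn as nn


def parent_adjacency(parents):
    """Return M with M[i, j] = 1 when node j is the parent of node i.

    parents[i] is the parent index of node i, or -1 for the root.
    Assumption: the root points at itself so its row is not all zeros.
    """
    n = len(parents)
    m = torch.zeros(n, n)
    for child, parent in enumerate(parents):
        m[child, parent if parent >= 0 else child] = 1.0
    return m


class TreeConv(nn.Module):
    """Hypothetical tree convolution over a window of k ancestors."""

    def __init__(self, dim, k=3):
        super().__init__()
        self.k = k
        # One weight matrix applied to [x; Mx; M^2 x; ...] per node.
        self.proj = nn.Linear(k * dim, dim)

    def forward(self, x, m):
        # x: (n_nodes, dim), m: (n_nodes, n_nodes)
        feats = [x]
        for _ in range(self.k - 1):
            # Each multiplication by M moves one step up the tree.
            feats.append(m @ feats[-1])
        return torch.relu(self.proj(torch.cat(feats, dim=-1)))


# Toy tree: node 0 is the root, 1 and 2 are its children, 3 is a child of 1.
parents = [-1, 0, 0, 1]
x = torch.randn(4, 8)
layer = TreeConv(dim=8, k=3)
out = layer(x, parent_adjacency(parents))
print(out.shape)  # torch.Size([4, 8])
```

Since every row of `M` has a single 1, `m @ x` is the same as indexing `x` with a tensor of parent indices, so for large trees the dense matrix could be replaced by a gather. That makes me think a graph library might not be strictly necessary here, but I would like to know if there is a cleaner way.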

cross-posted: