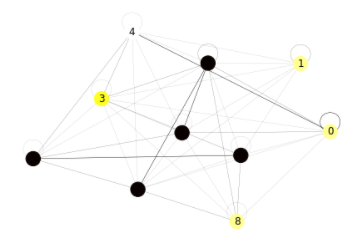

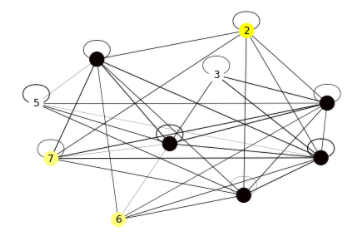

I’m trying to train a GCN on one graph at a time. (I need this for RL, where observations arrive in a sequence.)

When I train it with 1 batched graph, it works fine:

    Epoch 00195 | Time(s) 0.0674 | Loss 0.0003 | ETputs(KTEPS) 519.32
    Epoch 00196 | Time(s) 0.0674 | Loss 0.0056 | ETputs(KTEPS) 519.42
    Epoch 00197 | Time(s) 0.0674 | Loss 0.0024 | ETputs(KTEPS) 519.47
    Epoch 00198 | Time(s) 0.0674 | Loss 0.0181 | ETputs(KTEPS) 519.51
    Epoch 00199 | Time(s) 0.0674 | Loss 0.0019 | ETputs(KTEPS) 519.57

When I train on one graph at a time instead, it doesn’t work:

    for g in gs:
        logits = model(g, features)
        loss = loss_fcn(logits[train_mask], labels[train_mask])
        losses.append(loss.item())
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

Output:

    Epoch 00196 | Time(s) 0.1517 | Loss 9.9473 | ETputs(KTEPS) 4.62
    Epoch 00197 | Time(s) 0.1516 | Loss 10.5100 | ETputs(KTEPS) 4.62
    Epoch 00198 | Time(s) 0.1515 | Loss 10.6043 | ETputs(KTEPS) 4.62
    Epoch 00199 | Time(s) 0.1514 | Loss 10.0472 | ETputs(KTEPS) 4.62

I don’t know what I’m doing wrong. It looks like the weights are being reset with each new graph, but I’m not sure. I’m sure this has come up in the past, but I couldn’t find any solutions.
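One way to test the weight-reset suspicion is to snapshot the parameters around each `optimizer.step()` and compare them. The sketch below is only an illustration under assumptions: `TinyGCN`, `normalized_adj`, `snapshot`, and `max_diff` are hypothetical helpers, the graphs are random dense adjacency matrices rather than DGL graphs, and the data is synthetic. The loop has the same shape as the one above; only the bookkeeping is new.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinyGCN(nn.Module):
    """Hypothetical stand-in for the real model: two dense GCN-style layers."""

    def __init__(self, in_dim, hidden, n_classes):
        super().__init__()
        self.lin1 = nn.Linear(in_dim, hidden)
        self.lin2 = nn.Linear(hidden, n_classes)

    def forward(self, adj, x):
        h = F.relu(adj @ self.lin1(x))
        return adj @ self.lin2(h)


def normalized_adj(n, p=0.3):
    """Random symmetric adjacency with self-loops, scaled as D^-1/2 A D^-1/2."""
    a = (torch.rand(n, n) < p).float()
    a = ((a + a.t()) > 0).float()
    a.fill_diagonal_(1.0)  # self-loops keep every degree >= 1
    d = a.sum(dim=1).pow(-0.5)
    return d[:, None] * a * d[None, :]


def snapshot(model):
    """Detached copies of all parameters at this moment."""
    return [p.detach().clone() for p in model.parameters()]


def max_diff(a, b):
    """Largest absolute element-wise difference between two snapshots."""
    return max((x - y).abs().max().item() for x, y in zip(a, b))


torch.manual_seed(0)
n, in_dim, n_classes = 20, 8, 3
gs = [normalized_adj(n) for _ in range(4)]       # synthetic graphs (assumption)
features = torch.randn(n, in_dim)                # synthetic features (assumption)
labels = torch.randint(0, n_classes, (n,))
train_mask = torch.ones(n, dtype=torch.bool)

model = TinyGCN(in_dim, 16, n_classes)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)
loss_fcn = nn.CrossEntropyLoss()

drifts, changes = [], []
after_prev = snapshot(model)
for g in gs:
    before = snapshot(model)
    # Non-zero drift means something modified the weights between two steps.
    drifts.append(max_diff(before, after_prev))

    logits = model(g, features)
    loss = loss_fcn(logits[train_mask], labels[train_mask])
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

    after_prev = snapshot(model)
    # Zero change means the optimizer step did not update anything.
    changes.append(max_diff(after_prev, before))

print("drift between steps:", drifts)
print("change per step:", changes)
```

If the same bookkeeping in the real script shows zero drift between steps and a non-zero change per step, the weights are probably not being reset, and the cause would have to be looked for elsewhere, for example in whether `features`, `labels`, and `train_mask` actually correspond to each individual graph in `gs`.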

Runnable code: