I've followed the training example in the tutorials (dgl/README.md at master · dmlc/dgl · GitHub). Standalone mode works well, so I moved on to distributed training. The setup is two machines, with the launch.py command started from one of them. I've made sure ssh works between the two nodes (in both directions).
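
One caveat on the ssh check: ssh only shows that port 22 is reachable. The trainers and Gloo open their own TCP connections on other ports (the error below is for port 23433), and a firewall or security group could still block those. A minimal standard-library sketch for testing an arbitrary host/port pair (the name `can_connect` is my own helper, not part of DGL or PyTorch):

```python
import socket


def can_connect(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within `timeout` seconds."""
    try:
        # create_connection resolves `host` and tries each resolved address in turn
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts (socket.timeout subclasses OSError) and DNS failures
        return False
```

For example, start a throwaway listener on the other machine with `python -m http.server 8000`, then call `can_connect("<other machine's IP>", 8000)` from this one.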

Then I got a timeout error like this (despite some successful connection messages):

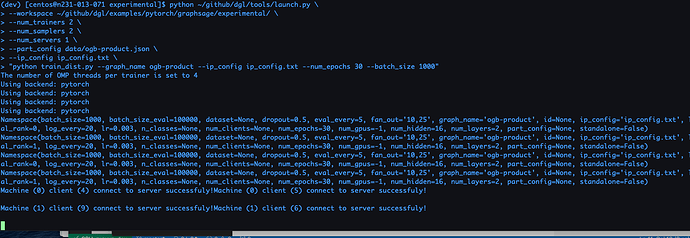

```
(dgl) [centos@n231-013-071 experimental]$ python ~/github/dgl/tools/launch.py --workspace ~/github/dgl/examples/pytorch/graphsage/experimental/ --num_trainers 1 --num_samplers 2 --num_servers 2 --part_config data/ogb-product.json --ip_config ip_config.txt "python train_dist.py --graph_name ogb-product --ip_config ip_config.txt --num_epochs 30 --batch_size 1000"
The number of OMP threads per trainer is set to 8
Using backend: pytorch
Using backend: pytorch
Namespace(batch_size=1000, batch_size_eval=100000, dataset=None, dropout=0.5, eval_every=5, fan_out='10,25', graph_name='ogb-product', id=None, ip_config='ip_config.txt', local_rank=0, log_every=20, lr=0.003, n_classes=None, num_clients=None, num_epochs=30, num_gpus=-1, num_hidden=16, num_layers=2, part_config=None, standalone=False)
Namespace(batch_size=1000, batch_size_eval=100000, dataset=None, dropout=0.5, eval_every=5, fan_out='10,25', graph_name='ogb-product', id=None, ip_config='ip_config.txt', local_rank=0, log_every=20, lr=0.003, n_classes=None, num_clients=None, num_epochs=30, num_gpus=-1, num_hidden=16, num_layers=2, part_config=None, standalone=False)
Machine (1) client (4) connect to server successfuly!
Machine (0) client (1) connect to server successfuly!
Traceback (most recent call last):
  File "train_dist.py", line 309, in <module>
    main(args)
  File "train_dist.py", line 256, in main
    th.distributed.init_process_group(backend='gloo')
  File "/home/centos/anaconda3/envs/dgl/lib/python3.7/site-packages/torch/distributed/distributed_c10d.py", line 446, in init_process_group
    timeout=timeout)
  File "/home/centos/anaconda3/envs/dgl/lib/python3.7/site-packages/torch/distributed/distributed_c10d.py", line 521, in _new_process_group_helper
    timeout=timeout)
RuntimeError: [/tmp/pip-req-build-mvu0v2f8/third_party/gloo/gloo/transport/tcp/pair.cc:769] connect [fe80::f816:3eff:fe09:d41d]:23433: Connection timed out
^C2021-04-19 05:45:03,779 INFO Stop launcher
```
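
For reference, the address in the error, `fe80::f816:3eff:fe09:d41d`, is in the IPv6 `fe80::/10` range, i.e. a link-local address that is only valid on a single network interface. A small standard-library sketch to classify addresses like this one and to list what this machine's hostname resolves to. It rests on my assumption (not verified in the source) that Gloo derives its address from the hostname unless `GLOO_SOCKET_IFNAME` is set; the helper names are my own:

```python
import ipaddress
import socket


def classify_address(addr: str) -> str:
    """Label an IP address string as loopback, link-local, private, or global."""
    # Drop an IPv6 zone suffix such as "%eth0"; older Pythons' ipaddress rejects it
    ip = ipaddress.ip_address(addr.split("%", 1)[0])
    if ip.is_loopback:
        return "loopback"
    if ip.is_link_local:
        return "link-local"
    if ip.is_private:
        return "private"
    return "global"


def hostname_addresses() -> dict:
    """Map each address this machine's hostname resolves to onto its classification."""
    infos = socket.getaddrinfo(socket.gethostname(), None)
    # info[4] is the sockaddr tuple; its first element is the address string (IPv4 or IPv6)
    return {info[4][0]: classify_address(info[4][0]) for info in infos}
```

Running `print(hostname_addresses())` on each machine shows whether the hostname resolves to a link-local IPv6 address rather than an address the other machine can reach.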

Most likely I've missed something in my setup, and I'm reading through the code to understand it. Any suggestions on which direction I should look into?

Thanks a lot!