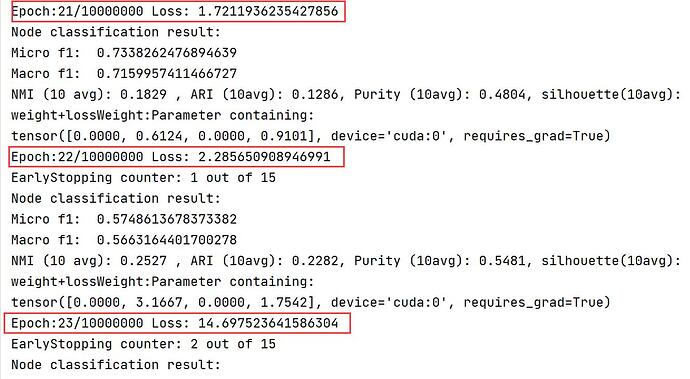

This is an unsupervised model for node classification on a heterogeneous graph. After upgrading the framework from 0.4.3.post2 to 0.6.1, the model's results are completely different, in some cases only about half of what they were. With 0.6.1, the loss also increases suddenly during training. What could be the reason for this?

Several questions:

- What is the unsupervised model you were running?

- Did you make any changes across versions?

- Could you post the code or provide a link to it?

- How large is the performance gap?

When training the model, the loss behaves normally at first but then increases suddenly, and the following warning appears:

```
/root/yes/envs/SPGCN/lib/python3.6/site-packages/sklearn/linear_model/_logistic.py:765: ConvergenceWarning: lbfgs failed to converge (status=1):
STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.
```

But I didn’t find this function in my project code.
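For what it's worth, the path in the warning points at scikit-learn's `LogisticRegression` (the `lbfgs` solver hit its iteration limit), so it most likely comes from an evaluation step somewhere in the model's code or a dependency rather than from the training loop itself, and it would not by itself explain the jump in loss. Below is a minimal sketch of how one might locate the call and avoid the warning. The `embeddings` and `labels` arrays and the `fit_probe` helper are hypothetical stand-ins, not part of the original project, and standardizing plus raising `max_iter` is only one common remedy.

```python
import warnings

import numpy as np
from sklearn.exceptions import ConvergenceWarning
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical stand-in for learned node embeddings and their labels.
rng = np.random.RandomState(0)
embeddings = rng.normal(size=(200, 16)) * 1000.0  # deliberately badly scaled
labels = (embeddings[:, 0] + embeddings[:, 1] > 0).astype(int)


def fit_probe(x, y, max_iter=1000):
    """Fit a logistic-regression probe on standardized features.

    Standardizing the inputs and raising max_iter above the default of 100
    are two common ways to help lbfgs converge.
    """
    clf = make_pipeline(
        StandardScaler(),
        LogisticRegression(solver="lbfgs", max_iter=max_iter),
    )
    clf.fit(x, y)
    return clf


# Escalate ConvergenceWarning to an exception: if the solver still fails to
# converge, the resulting traceback shows exactly which call triggered it.
with warnings.catch_warnings():
    warnings.simplefilter("error", category=ConvergenceWarning)
    probe = fit_probe(embeddings, labels)

accuracy = probe.score(embeddings, labels)
```

Wrapping the real training or evaluation entry point in the same `warnings.catch_warnings()` block should produce a traceback that shows which file calls `LogisticRegression`, which may help even if the call is not in your own project code.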

This is the model I use, without any modification to the code.
