When running the example code train_ppi.py, I found that my training accuracy drops dramatically during the last epochs.

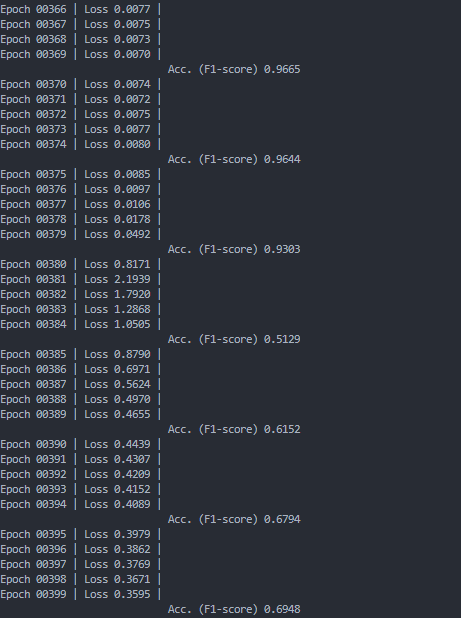

As shown below:

I tried many times, but the result was always the same: accuracy is almost halved during the last few epochs.

My environment:

os: Ubuntu 20

python: 3.8.15

backend: pytorch ‘1.11.0+cu113’

dgl: ‘0.9.1post1’

gpu: NVIDIA GeForce RTX 3090

cuda: 11.7

Thanks for your help!