Hello All. I am new in the graph and would appreciate if anyone could help.

I want to classify graph nodes as odd or even based on the feature value of each node. the features are randomly integer numbers between 1 to 100.

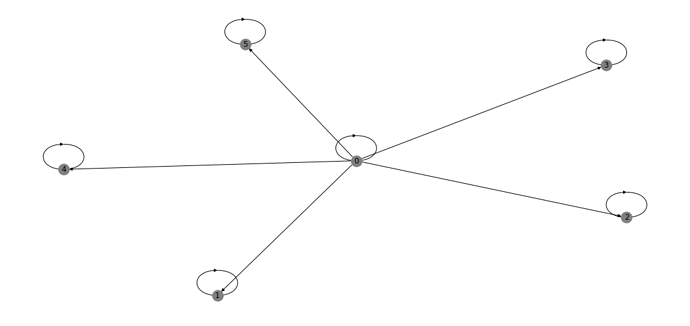

the base graph is like this

base_graph = dgl.graph(([0, 0, 0, 0, 0], [1, 2, 3, 4, 5]))

base_graph=base_graph.add_self_loop()

first I define a function that generates a batch of the same graphs and applies a random number to each node.

def generate_graphs(num_):

list_g=[]

list_label=[]

list_rnd=[]

for _ in range(num_):

g=copy.deepcopy(base_graph)

rnd_num=torch.randint(0,1000,(1,))

g.ndata['x']=torch.ones(6, 1)*rnd_num

list_g.append(g)

list_label.append(torch.tensor(int((rnd_num*6)%4)))

list_rnd.append(rnd_num)

return list_g,list_label,list_rnd

I also used a simple model:

class GCN(nn.Module):

def __init__(self, in_feats, h_feats, num_classes):

super(GCN, self).__init__()

self.conv1 = dgl.nn.pytorch.GraphConv(in_feats, h_feats)

self.conv2 = dgl.nn.pytorch.GraphConv(h_feats, num_classes)

def forward(self, g, in_feat):

h = self.conv1(g, in_feat)

h = torch.relu(h)

h = self.conv2(g, h)

return h

and trained like this.

model=GCN(1,16,2)

optimizer = torch.optim.Adam(model.parameters(), lr=0.01)

loss_fn = nn.CrossEntropyLoss()

for epoch in range(1000):

model.train()

list_g,list_label,_=generate_graphs(64)

# pdb.set_trace()

g=dgl.batch(list_g)

node_features=g.ndata['x']

logits = model(g, node_features)

# loss=nn.functional.cross_entropy(logits,torch.tensor(list_label).long())

loss=F.nll_loss(F.log_softmax(logits,1),list_label)

optimizer.zero_grad()

loss.backward()

optimizer.step()

if epoch%100==0:

print('Epoch %d | Loss: %.4f' % (epoch, loss.item()))

but the model is not learning at all.