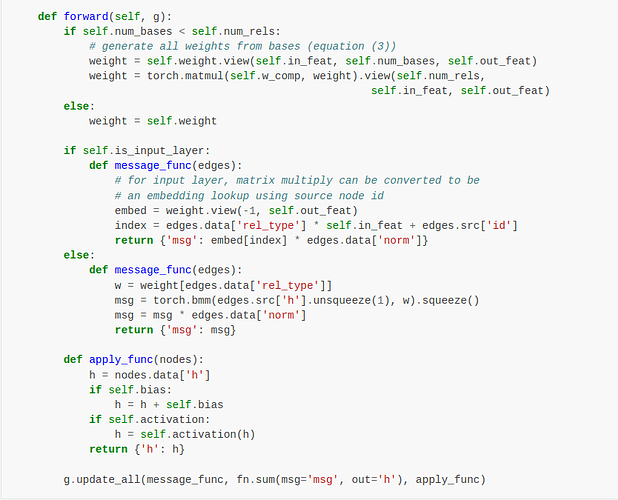

In the code at https://docs.dgl.ai/tutorials/models/1_gnn/4_rgcn.html, I can’t really understand how the weights are created from the weight bases. Why do we reshape the tensor first and then use matmul? What is the underlying idea?
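Here is a minimal sketch of the idea, assuming the basis decomposition from the R-GCN paper, W_r = Σ_b a_rb V_b. Each relation's weight matrix is a learned linear combination of a few shared basis matrices. If you flatten every basis into a row, that sum becomes one matrix product covering all relations at once, and a final reshape restores the matrix shape. The sketch uses NumPy instead of PyTorch. The names `weights_from_bases`, `w_comp` and `bases` are illustrative. This shows the underlying idea, not the tutorial's exact code.

```python
import numpy as np

def weights_from_bases(w_comp, bases):
    """Combine basis matrices into one weight matrix per relation.

    w_comp: (num_rels, num_bases) coefficients a_rb
    bases:  (num_bases, in_feat, out_feat) basis matrices V_b
    returns (num_rels, in_feat, out_feat) with W_r = sum_b a_rb * V_b
    """
    num_bases, in_feat, out_feat = bases.shape
    # Flatten each basis into one row so the weighted sum is a single matmul:
    # (num_rels, num_bases) @ (num_bases, in_feat * out_feat)
    flat = bases.reshape(num_bases, in_feat * out_feat)
    combined = w_comp @ flat
    # Restore the (in_feat, out_feat) matrix shape for every relation.
    return combined.reshape(-1, in_feat, out_feat)

def weights_from_bases_loop(w_comp, bases):
    """Reference version: explicit sum over the bases for each relation."""
    num_rels = w_comp.shape[0]
    out = np.zeros((num_rels,) + bases.shape[1:])
    for r in range(num_rels):
        for b in range(bases.shape[0]):
            out[r] += w_comp[r, b] * bases[b]
    return out

rng = np.random.default_rng(0)
num_rels, num_bases, in_feat, out_feat = 5, 2, 4, 3
w_comp = rng.standard_normal((num_rels, num_bases))
bases = rng.standard_normal((num_bases, in_feat, out_feat))

W = weights_from_bases(w_comp, bases)
print(W.shape)  # (5, 4, 3)
print(np.allclose(W, weights_from_bases_loop(w_comp, bases)))  # True
```

You can write the same combination as `np.einsum('rb,bio->rio', w_comp, bases)`. The reshape-and-matmul form simply expresses it as an ordinary 2-D matrix product. Because there are fewer bases than relations, fewer parameters are learned.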

Also, in the message function for the input layer, why is the message computed as

```python
embed = weight.view(-1, self.out_feat)
index = edges.data['rel_type'] * self.in_feat + edges.src['id']
return {'msg': embed[index] * edges.data['norm']}
```

What is the underlying idea? Why aren’t there any node features coming from the data?
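Here is a minimal sketch of why the lookup works. It assumes, as in the featureless setting of the R-GCN paper, that the input layer has no data features. Instead, each node is represented by a one-hot vector of its id, so `in_feat` equals the number of nodes. Multiplying the one-hot vector for node j by W_r just selects row j of W_r. After the weights are flattened to shape (num_rels * in_feat, out_feat), that row sits at index `rel_type * in_feat + j`. The edge arrays and the function names below are hypothetical, and NumPy stands in for PyTorch/DGL.

```python
import numpy as np

def lookup_messages(weight, src, rel_type, norm):
    """Messages via row lookup, mirroring the input-layer trick."""
    _, in_feat, out_feat = weight.shape
    # Stack all W_r vertically: row r * in_feat + j is row j of W_r.
    embed = weight.reshape(-1, out_feat)
    index = rel_type * in_feat + src
    return embed[index] * norm

def matmul_messages(weight, src, rel_type, norm):
    """Reference version: one-hot(src) @ W_r for every edge, then scale."""
    in_feat = weight.shape[1]
    one_hot = np.eye(in_feat)[src]  # one one-hot row per edge
    msgs = [one_hot[e] @ weight[rel_type[e]] for e in range(len(src))]
    return np.stack(msgs) * norm

rng = np.random.default_rng(0)
num_nodes, num_rels, out_feat = 6, 3, 4
in_feat = num_nodes  # assumption: one-hot node ids are the input features
weight = rng.standard_normal((num_rels, in_feat, out_feat))

# Hypothetical edges: source node ids, relation types, normalisation constants.
src = np.array([0, 2, 5, 3])
rel_type = np.array([1, 0, 2, 1])
norm = np.array([[0.5], [1.0], [0.25], [0.5]])

msg_lookup = lookup_messages(weight, src, rel_type, norm)
msg_matmul = matmul_messages(weight, src, rel_type, norm)
print(np.allclose(msg_lookup, msg_matmul))  # True
```

The lookup avoids building the (num_edges × num_nodes) one-hot matrix and the matrix products, which matters on large graphs. In the sketch, the two versions produce the same messages.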

I am really new to the subject, so sorry if these are trivial questions.