I am new to DGL and am currently reading the tutorials. In the Tree-LSTM implementation:

https://docs.dgl.ai/en/latest/tutorials/models/2_small_graph/3_tree-lstm.html

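```python
import torch as th
import torch.nn as nn


class TreeLSTMCell(nn.Module):
    def __init__(self, x_size, h_size):
        super(TreeLSTMCell, self).__init__()
        # Input projection for the i, o, u gates (stacked: 3 * h_size outputs)
        self.W_iou = nn.Linear(x_size, 3 * h_size, bias=False)
        # Hidden-state projection for i, o, u; the input size is 2 * h_size
        self.U_iou = nn.Linear(2 * h_size, 3 * h_size, bias=False)
        self.b_iou = nn.Parameter(th.zeros(1, 3 * h_size))
        # Forget-gate projection: 2 * h_size inputs, 2 * h_size outputs
        self.U_f = nn.Linear(2 * h_size, 2 * h_size)
```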

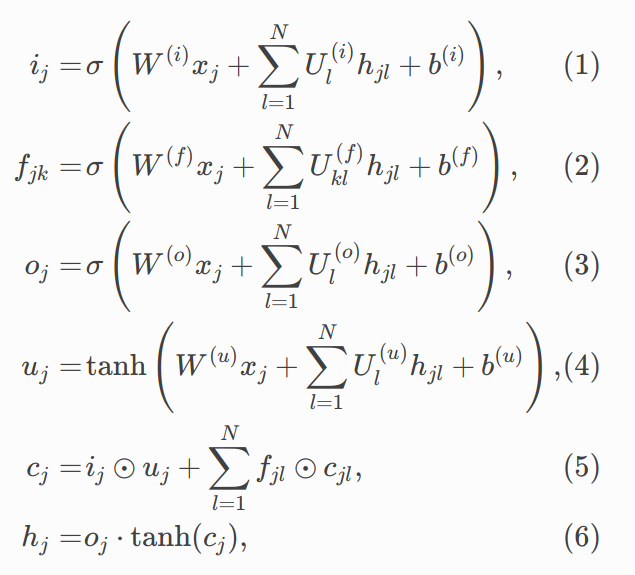

I do not understand why `U_iou` and `U_f` use `2 * h_size`: `U_iou` takes a `2 * h_size` input, and `U_f` maps `2 * h_size` to `2 * h_size`. Compared with the original equations (Equations 1-4), shouldn't these be `h_size`?
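A minimal PyTorch sketch for checking the shapes, assuming the tutorial's model is the binary (N = 2) N-ary Tree-LSTM, where every internal node has exactly two children whose hidden states are concatenated before the linear layers. The tensor names (`h_left`, `h_right`, `c_left`, `c_right`) are hypothetical and chosen for illustration only. The sketch checks that one `nn.Linear(2 * h_size, 3 * h_size)` applied to the concatenation gives the same result as summing two per-child maps with `h_size` inputs each, which is the `Σ_l U_l h_l` form of the N-ary equations.

```python
import torch as th
import torch.nn as nn

th.manual_seed(0)
h_size, batch = 4, 3

# Hypothetical hidden/cell states of the left and right child for a batch of nodes.
h_left, h_right = th.randn(batch, h_size), th.randn(batch, h_size)
c_left, c_right = th.randn(batch, h_size), th.randn(batch, h_size)

# Assumption: the two children are concatenated, giving shape (batch, 2 * h_size).
h_cat = th.cat([h_left, h_right], dim=1)

# i, o, u projection: a single layer over the concatenated children.
U_iou = nn.Linear(2 * h_size, 3 * h_size, bias=False)
out_concat = U_iou(h_cat)

# U_iou.weight has shape (3 * h_size, 2 * h_size); split it into one
# (3 * h_size, h_size) block per child and apply each block to its own child.
U_left, U_right = U_iou.weight.split(h_size, dim=1)
out_sum = h_left @ U_left.T + h_right @ U_right.T

# Forget gates: 2 * h_size outputs = one h_size-sized gate per child.
U_f = nn.Linear(2 * h_size, 2 * h_size)
f = th.sigmoid(U_f(h_cat)).view(batch, 2, h_size)  # f[:, 0] left, f[:, 1] right

# Gate each child's cell state with its own forget gate, then sum over children.
c_children = th.stack([c_left, c_right], dim=1)  # (batch, 2, h_size)
c_contrib = (f * c_children).sum(dim=1)          # (batch, h_size)

print(out_concat.shape, f.shape, c_contrib.shape)
print(th.allclose(out_concat, out_sum, atol=1e-6))
```

Under this assumption, the `2 * h_size` input size reflects the two concatenated children rather than a single hidden state. The block-split weight is exactly the pair of per-child `h_size`-input matrices.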