Hi, thank you very much for taking the time to read my question. I was just reading about prop_nodes and haven't fully understood it yet.

g.prop_nodes([[2,3],[4]] , fn.copy_src('x', 'm'), fn.sum('m', 'x'))

g.prop_nodes([[2,3]] , fn.copy_src('x', 'm'), fn.sum('m', 'x'))

g.prop_nodes([4] , fn.copy_src('x', 'm'), fn.sum('m', 'x'))

Is the first call above the same as running the second call and then the third?

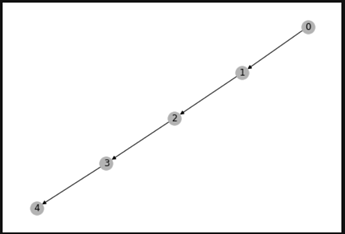
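To show what I currently believe happens, here is a rough plain-Python sketch of my mental model. It is not DGL code: `pull` and `prop_nodes` below are my own stand-ins, and the toy graph (edges 1->2, 1->3, 2->4, 3->4) and the feature values are made up for illustration. I am assuming each frontier triggers a sum over incoming neighbours, one frontier after another.

```python
# Plain-Python stand-in for my mental model of prop_nodes (NOT DGL code).
# Assumed toy graph and made-up scalar features, for illustration only.
edges = [(1, 2), (1, 3), (2, 4), (3, 4)]

def pull(x, frontier):
    """Every node in the frontier sums its in-neighbours' features at once."""
    new = {v: sum(x[u] for u, w in edges if w == v) for v in frontier}
    return {**x, **new}

def prop_nodes(x, frontiers):
    """Trigger pull on one frontier after another, in the given order."""
    for frontier in frontiers:
        x = pull(x, frontier)
    return x

x0 = {1: 1.0, 2: 10.0, 3: 100.0, 4: 1000.0}

# One call with two frontiers vs. two separate calls with one frontier each.
one_call = prop_nodes(x0, [[2, 3], [4]])
two_calls = prop_nodes(prop_nodes(x0, [[2, 3]]), [[4]])
```

If this model is right, `one_call` and `two_calls` should hold the same features, which is what I would like to confirm for the real API.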

Also, suppose I have a directed graph. What would be the easiest way to access the features of earlier nodes (I read some posts about k-hop but am unsure how it is implemented…), ideally as simple as accessing the previous node's feature? For example, at node 4 I would like to get the features of nodes 3, 2 and 1. Can I send a message from one node to another node that is not its direct successor, given that there is a path of edges between them?

def f_edge(edges):
    return {'h': edges.src['x']}
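To make the second question concrete, this is roughly the result I am after, written in plain Python without DGL. The chain 1 -> 2 -> 3 -> 4, the feature values, and the helper name `upstream_features` are all hypothetical, chosen only to illustrate the idea of walking edges backwards from a node.

```python
from collections import deque

# Assumed toy chain 1 -> 2 -> 3 -> 4 with made-up features (not my real data).
edges = [(1, 2), (2, 3), (3, 4)]
x = {1: 'x1', 2: 'x2', 3: 'x3', 4: 'x4'}

def upstream_features(node):
    """Collect features of every node that can reach `node`, nearest first."""
    # Map each node to its direct predecessors.
    preds = {}
    for u, v in edges:
        preds.setdefault(v, []).append(u)
    # Breadth-first walk along reversed edges.
    seen, order, queue = {node}, [], deque([node])
    while queue:
        cur = queue.popleft()
        for p in preds.get(cur, []):
            if p not in seen:
                seen.add(p)
                order.append(p)
                queue.append(p)
    return [x[p] for p in order]

result = upstream_features(4)
```

Here `result` holds the features of nodes 3, 2 and 1 in that order. I would like to know whether there is a built-in way to get the same thing through message passing.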

Thank you very much for your time!