Hi,

I have been looking into PinSage Pytorch example code and noticed that there are some differences between the implementation and the paper.

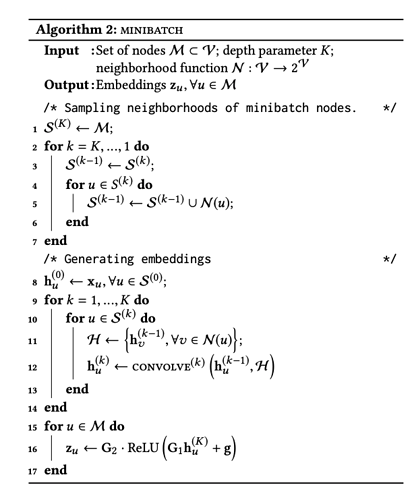

Regarding generating embedding vectors for nodes, paper provides the following “algorithm” (shown in the screenshot)

There, you can see after applying two GCN layers (‘CONVOLVE’ operations), another fully connected dense layer is applied (Line 15 to Line 17 in the screenshot).

In the code, I see the following implementation which I am not able to map any step in the paper. Especially I can’t understand the purpose of “return h_item_dst + self.sage(blocks, h_item)” line in get_repr function. Why are the node features of “DST” nodes are added to output of GCN layers?

class PinSAGEModel(nn.Module):

def __init__(self, full_graph, ntype, textsets, hidden_dims, n_layers):

super().__init__()

self.proj = layers.LinearProjector(full_graph, ntype, textsets, hidden_dims)

self.sage = layers.SAGENet(hidden_dims, n_layers)

self.scorer = layers.ItemToItemScorer(full_graph, ntype)

def forward(self, pos_graph, neg_graph, blocks):

h_item = self.get_repr(blocks)

pos_score = self.scorer(pos_graph, h_item)

neg_score = self.scorer(neg_graph, h_item)

return (neg_score - pos_score + 1).clamp(min=0)

def get_repr(self, blocks):

h_item = self.proj(blocks[0].srcdata)

h_item_dst = self.proj(blocks[-1].dstdata)

return h_item_dst + self.sage(blocks, h_item)