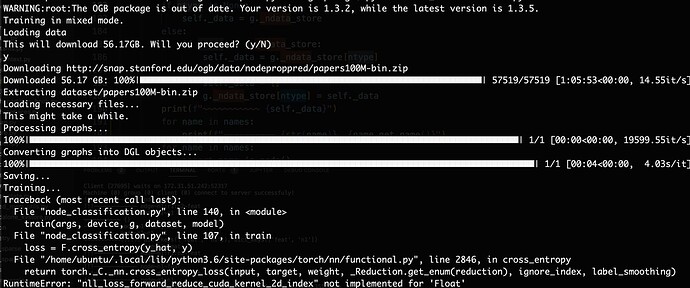

I am not able to download and run papers100m, which was not a problem in August. The error is as following:

cc@exp:~$ python3 dgl/examples/pytorch/graphsage/node_classification_1.py

Training in mixed mode.

Loading data

Traceback (most recent call last):

File "/usr/lib/python3.8/urllib/request.py", line 1354, in do_open

h.request(req.get_method(), req.selector, req.data, headers,

File "/usr/lib/python3.8/http/client.py", line 1256, in request

self._send_request(method, url, body, headers, encode_chunked)

File "/usr/lib/python3.8/http/client.py", line 1302, in _send_request

self.endheaders(body, encode_chunked=encode_chunked)

File "/usr/lib/python3.8/http/client.py", line 1251, in endheaders

self._send_output(message_body, encode_chunked=encode_chunked)

File "/usr/lib/python3.8/http/client.py", line 1011, in _send_output

self.send(msg)

File "/usr/lib/python3.8/http/client.py", line 951, in send

self.connect()

File "/usr/lib/python3.8/http/client.py", line 922, in connect

self.sock = self._create_connection(

File "/usr/lib/python3.8/socket.py", line 787, in create_connection

for res in getaddrinfo(host, port, 0, SOCK_STREAM):

File "/usr/lib/python3.8/socket.py", line 918, in getaddrinfo

for res in _socket.getaddrinfo(host, port, family, type, proto, flags):

socket.gaierror: [Errno -2] Name or service not known

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "dgl/examples/pytorch/graphsage/node_classification_1.py", line 135, in <module>

dataset = AsNodePredDataset(DglNodePropPredDataset('ogbn-papers100M'))

File "/home/cc/ogb/ogb/nodeproppred/dataset_dgl.py", line 69, in __init__

self.pre_process()

File "/home/cc/ogb/ogb/nodeproppred/dataset_dgl.py", line 98, in pre_process

if decide_download(url):

File "/home/cc/ogb/ogb/utils/url.py", line 12, in decide_download

d = ur.urlopen(url)

File "/usr/lib/python3.8/urllib/request.py", line 222, in urlopen

return opener.open(url, data, timeout)

File "/usr/lib/python3.8/urllib/request.py", line 525, in open

response = self._open(req, data)

File "/usr/lib/python3.8/urllib/request.py", line 542, in _open

result = self._call_chain(self.handle_open, protocol, protocol +

File "/usr/lib/python3.8/urllib/request.py", line 502, in _call_chain

result = func(*args)

File "/usr/lib/python3.8/urllib/request.py", line 1383, in http_open

return self.do_open(http.client.HTTPConnection, req)

File "/usr/lib/python3.8/urllib/request.py", line 1357, in do_open

raise URLError(err)

urllib.error.URLError: <urlopen error [Errno -2] Name or service not known>

I saw similar issues and followed the suggestion there to change the proxy but it did not work for me. Using the mainland proxy gave me ConnectionRefusedError: [Errno 111] Connection refused; using the IP of the virtual machine or my physical IP gave urllib.error.URLError: <urlopen error [Errno 110] Connection timed out>. I am wondering how I can solve this. Thanks in advance!