Hi @mufeili, I am using a graph auto-encoder (GAE) model to reconstruct input graphs. Given an input adjacency matrix (A_i) with node features (X_i), the model outputs a new adjacency matrix (A_o). The reconstruction loss is calculated by comparing A_i and A_o.

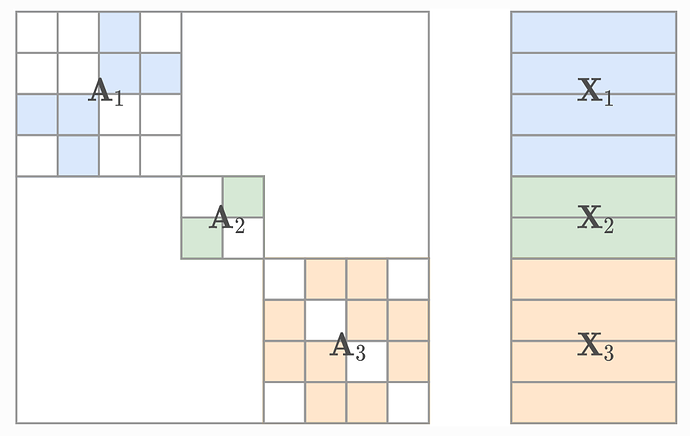

My question is about batched graphs in DGL. If the batched graph contains 3 graphs (as shown in the image), then the loss should be calculated only on the relevant blocks (i.e., A1, A2, A3) of the batched adjacency matrix. The white space outside A1, A2, and A3 should be ignored during training because the graphs are disjoint. How do I do this in DGL batched training? Or is it handled automatically by DGL? Can we mask edges?
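
To make the question concrete, here is a minimal sketch in plain Python of the masking I have in mind. It assumes the number of nodes in each graph of the batch is known (in DGL I believe this can be read from the batched graph with `batch_num_nodes()`), and that A_i and A_o are dense square matrices over all nodes of the batch. The helper names `block_diagonal_mask` and `masked_bce` are hypothetical and are not DGL APIs.

```python
import math


def block_diagonal_mask(num_nodes_per_graph):
    """Return a dense 0/1 mask that is 1 only inside each graph's own block."""
    total = sum(num_nodes_per_graph)
    mask = [[0] * total for _ in range(total)]
    offset = 0
    for n in num_nodes_per_graph:
        # Nodes offset .. offset+n-1 belong to the same graph.
        for i in range(offset, offset + n):
            for j in range(offset, offset + n):
                mask[i][j] = 1
        offset += n
    return mask


def masked_bce(a_true, a_pred, mask, eps=1e-12):
    """Binary cross-entropy averaged over the masked (in-block) entries only."""
    total_loss, count = 0.0, 0
    for i, row in enumerate(mask):
        for j, keep in enumerate(row):
            if not keep:
                continue  # skip the white space between graphs
            p = min(max(a_pred[i][j], eps), 1.0 - eps)  # avoid log(0)
            y = a_true[i][j]
            total_loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
            count += 1
    return total_loss / count if count else 0.0
```

For example, `block_diagonal_mask([2, 1, 3])` gives a 6x6 mask with three blocks of sizes 2, 1, and 3 on the diagonal, and `masked_bce` then ignores every predicted entry outside those blocks. In practice I would expect to do this with tensors rather than nested lists; the sketch only shows the behaviour I am asking about.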