I am using the UK2006 dataset (uk-2006-05) to simulate GraphSAGE training, but I get a ‘Segmentation fault (core dumped)’ error when I try to load the data with DGL's DataLoader. The last output before the crash is "flag...", which is printed just before the DataLoader is constructed. I’m not sure what is causing this and would appreciate some help. I’m running the program on a machine with an A100 GPU and 500GB of memory, so I don’t think it is a memory issue.

import numpy as np

import torch

import dgl

from dgl.dataloading import DataLoader, NeighborSampler

path = "/home/xxx/workspace/data"

dataset = "uk-2006-05"

graphbin = "%s/%s/graph.bin" % (path,dataset)

labelbin = "%s/%s/labels.bin" % (path,dataset)

featsbin = "%s/%s/feats_%d.bin" % (path,dataset,100)

edges = np.fromfile(graphbin,dtype=np.int32)

srcs = torch.tensor(edges[::2])

dsts = torch.tensor(edges[1::2])

g = dgl.graph((srcs,dsts))

feats = np.fromfile(featsbin,dtype=np.float32).reshape(-1,100)

feats_tmp = feats[:77741023]

label = np.fromfile(labelbin,dtype=np.int64)

label = label[:77741023]

g.ndata['feat'] = torch.tensor(feats_tmp)

g.ndata['label'] = torch.tensor(label)

trainnum = int(77741023 * 0.01)

train_idx = np.arange(trainnum,dtype=np.int32)

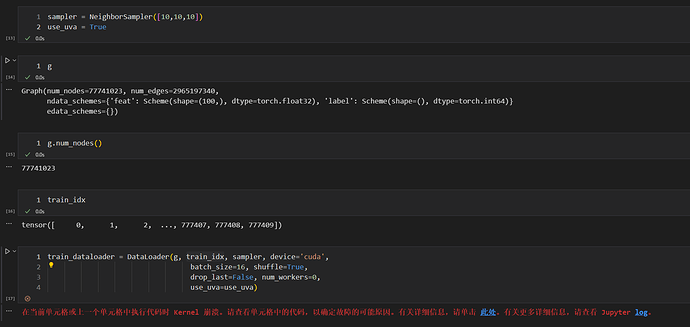

sampler = NeighborSampler([10,10,10])

use_uva = True

print("flag...")

train_dataloader = DataLoader(g, train_idx, sampler, device='cuda', batch_size=16, shuffle=True, drop_last=False, num_workers=0, use_uva=use_uva)

Error message:

flag...

Segmentation fault (core dumped)
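One check that might help narrow this down is sketched below. I have not confirmed that it is related to the crash. The edges are read as `np.int32`, so `dgl.graph` builds a graph with an int32 ID type, and DGL's documentation describes the ID type as covering both node and edge IDs. If the file holds more than 2^31 - 1 edges, or contains IDs outside the expected node range, that could be worth ruling out. The helper name `check_edge_array` and the keys of its report are hypothetical and only use NumPy, not any DGL API.

```python
import numpy as np

INT32_MAX = np.iinfo(np.int32).max  # 2**31 - 1


def check_edge_array(edges: np.ndarray, num_nodes: int) -> dict:
    """Summarize a flat [src0, dst0, src1, dst1, ...] edge array.

    Hypothetical helper for illustration; it does not call DGL.
    """
    num_edges = edges.size // 2
    if edges.size:
        min_id = int(edges.min())
        max_id = int(edges.max())
    else:
        min_id = max_id = None
    ids_in_range = edges.size == 0 or (min_id >= 0 and max_id < num_nodes)
    return {
        # An odd length means the file is not a clean list of (src, dst) pairs.
        "has_odd_length": edges.size % 2 == 1,
        "num_edges": num_edges,
        # False means edge IDs cannot all be represented as int32.
        "edges_fit_int32": num_edges <= INT32_MAX,
        "min_id": min_id,
        "max_id": max_id,
        # False means some ID is negative or >= num_nodes.
        "ids_in_range": ids_in_range,
    }


if __name__ == "__main__":
    # Tiny stand-in for np.fromfile(graphbin, dtype=np.int32).
    toy_edges = np.array([0, 1, 1, 2, 2, 0], dtype=np.int32)
    print(check_edge_array(toy_edges, num_nodes=3))
```

On the real data this would be called as `check_edge_array(edges, 77741023)` right after the `np.fromfile(graphbin, ...)` line. If `edges_fit_int32` came back `False`, converting `srcs`, `dsts` and `train_idx` to int64 before building the graph would be one thing to try.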