Hi, I am working with large homogeneous graphs (2M+ nodes) that have sparse features.

To fit the features in memory, I want to store them as sparse matrices.
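A rough way to check whether sparse storage pays off is to compare buffer sizes. This is a sketch on a toy matrix, assuming float32 features in PyTorch's COO layout; dense_bytes and coo_bytes are my own hypothetical helpers, not DGL or PyTorch functions.

```python
import torch


def dense_bytes(x: torch.Tensor) -> int:
    """Bytes used by the data buffer of a dense (strided) tensor."""
    return x.numel() * x.element_size()


def coo_bytes(x: torch.Tensor) -> int:
    """Bytes used by the index and value buffers of a COO sparse tensor."""
    x = x.coalesce()
    idx, val = x.indices(), x.values()
    return idx.numel() * idx.element_size() + val.numel() * val.element_size()


# Toy stand-in for the real feature matrix: 1,000 nodes x 500 features, ~1% non-zero.
torch.manual_seed(0)
mask = torch.rand(1000, 500) < 0.01
dense = (torch.rand(1000, 500) + 1.0) * mask  # +1.0 keeps every masked-in entry non-zero
sparse = dense.to_sparse()  # COO layout: int64 indices, float32 values

print(dense_bytes(dense))  # 2000000 (1000 * 500 * 4 bytes)
print(coo_bytes(sparse))   # 20 bytes per non-zero: two int64 indices + one float32 value
```

With these dtypes, COO costs 20 bytes per non-zero versus 4 bytes per entry when dense, so it only saves memory when fewer than about 20% of the entries are non-zero.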

Are sparse matrices (a torch sparse tensor or a DGL SparseMatrix) currently supported as node features and in message passing?
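For context, the aggregation I need (copy_u followed by sum) amounts to multiplying the transposed adjacency matrix by the feature matrix, so in principle it could stay sparse end to end. The sketch below is my own pure-PyTorch illustration of that equivalence, not a DGL API: sparse_copy_u_sum is a hypothetical helper, and it assumes the graph is given as src/dst edge-index tensors.

```python
import torch


def sparse_copy_u_sum(src: torch.Tensor, dst: torch.Tensor,
                      feat: torch.Tensor, num_nodes: int) -> torch.Tensor:
    """Hypothetical helper: out[v] = sum of feat[u] over all edges u -> v.

    Intended to mirror update_all(copy_u('h', 'm'), sum('m', 'h')),
    with `feat` as a sparse COO tensor of shape (num_nodes, num_features).
    """
    # Aggregation matrix M with M[v, u] = 1 for every edge u -> v (the transposed adjacency).
    indices = torch.stack([dst, src])
    values = torch.ones(src.numel(), dtype=feat.dtype)
    agg = torch.sparse_coo_tensor(indices, values, (num_nodes, num_nodes)).coalesce()
    # coalesce() sums duplicate edges, matching the sum reducer; sparse @ sparse stays sparse.
    return torch.sparse.mm(agg, feat.coalesce())


# Tiny example: edges 0->1, 1->2, 2->0, 2->1 on a 3-node graph.
src = torch.tensor([0, 1, 2, 2])
dst = torch.tensor([1, 2, 0, 1])
x = torch.tensor([[1., 0., 0.], [0., 2., 0.], [0., 0., 3.]])
out = sparse_copy_u_sum(src, dst, x.to_sparse(), num_nodes=3)
print(out.to_dense())  # rows: [0, 0, 3], [1, 0, 3], [0, 2, 0]
```

Whether DGL's own update_all can run this product on a SparseMatrix stored in ndata is exactly what I am asking.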

Here is an example of what I am trying to do:

feat_sp = dglsp.from_torch_sparse(feat)

graph.ndata['h'] = feat_sp

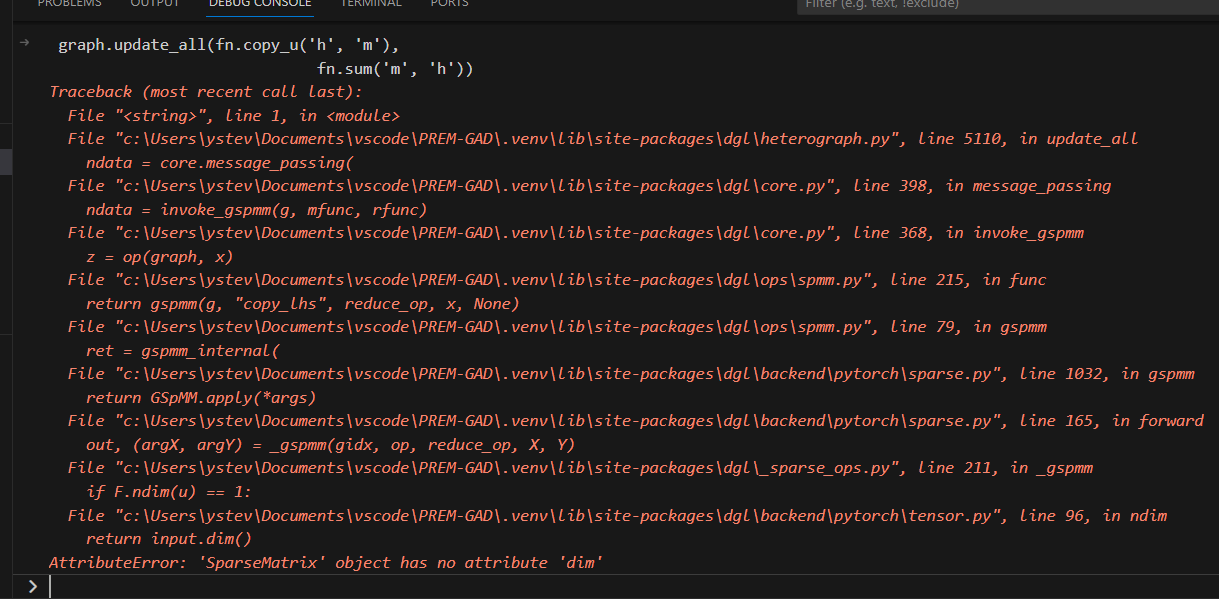

graph.update_all(fn.copy_u('h', 'm'),

fn.sum('m', 'h'))

When I run this I get an error:

Thanks!