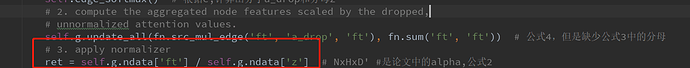

In the implementation of GAT, you apply the normalizer after computing the aggregated node features, as follows:

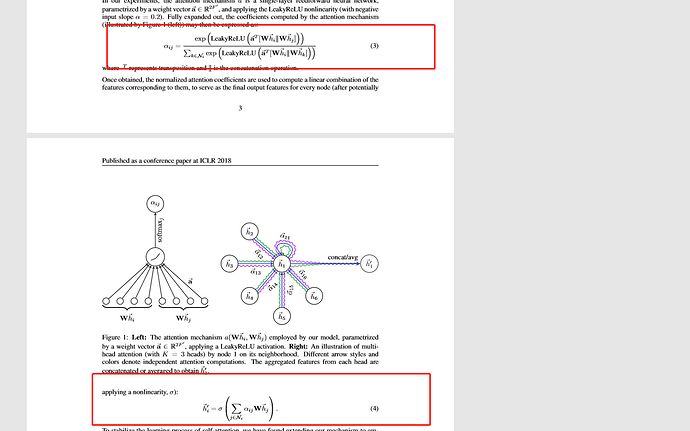

However, in the original paper, the authors apply the normalizer before computing the aggregated node features, as follows:

Why did you change the order?
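To make the question concrete, here is a minimal NumPy sketch of the two orderings as I understand them, for a single node and a single attention head. It assumes the normalizer is the softmax denominator (the sum of exponentiated attention scores over the neighbors). The variable names (`e`, `h`, `out_paper`, `out_impl`) are my own and are not taken from the repository code.

```python
import numpy as np

rng = np.random.default_rng(0)
num_neighbors, feat_dim = 4, 3

# Hypothetical inputs for one target node i:
e = rng.normal(size=num_neighbors)              # unnormalized attention scores e_ij
h = rng.normal(size=(num_neighbors, feat_dim))  # transformed neighbor features W h_j

# Order in the paper: normalize the scores first, then aggregate.
alpha = np.exp(e) / np.exp(e).sum()
out_paper = alpha @ h

# Order as I read the implementation: aggregate with unnormalized
# weights first, then divide by the normalizer.
unnorm = np.exp(e)
out_impl = (unnorm @ h) / unnorm.sum()

print(np.allclose(out_paper, out_impl))
```

In this toy setting the two results agree up to floating-point error, since the normalizer is a per-node scalar that can be factored out of the sum. If the implementation applies anything else between the two steps (dropout on the attention weights, for example), the two orderings may no longer coincide, which is part of what I would like to understand.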

By the way, does the GAT model converge on the Cora dataset?

I ran the model with `python train.py --dataset=cora`, and the result is the following: