I have DGL working perfectly fine in a distributed setting with the default `num_workers=0` (which, as I understand it, samples without a sampler pool). Now I am extending it to use multiple sampler processes for higher sampling throughput.

In the server process, I did this:

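```python
import os
from dgl.distributed import DistGraphServer

def start_server(self):
    # Mark this process as a DGL server in distributed mode
    os.environ["DGL_DIST_MODE"] = "distributed"
    os.environ["DGL_ROLE"] = "server"
    os.environ["DGL_SERVER_ID"] = str(self._rank)

    g = DistGraphServer( … , disable_shared_mem=False)
    g.start()
```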
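A minimal sketch of the same server-side environment setup factored into a helper, so the values can be checked on a plain dict before `DistGraphServer` is constructed. `server_env` is a hypothetical name of my own, not a DGL API; it only sets the three variables from the snippet above.

```python
import os

def server_env(server_id: int, env=None) -> dict:
    """Hypothetical helper: sets the server-side variables used above.

    Writes into os.environ by default; pass a plain dict as env to
    inspect the values without touching the process environment.
    """
    env = os.environ if env is None else env
    env["DGL_DIST_MODE"] = "distributed"
    env["DGL_ROLE"] = "server"
    env["DGL_SERVER_ID"] = str(server_id)
    return env

# Usage (sketch): call server_env(self._rank) before DistGraphServer(...).
```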

In the training/client process, I did this:

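```python
import os
import dgl
from dgl.distributed import DistGraph, load_partition_book

def sagemain(ip_config_file, local_partition_file, args, rank):
    # Mark this process as a DGL client (trainer) in distributed mode
    os.environ["DGL_DIST_MODE"] = "distributed"
    os.environ["DGL_ROLE"] = "client"
    os.environ["DGL_NUM_SAMPLER"] = "3"

    # Start 3 sampler processes, the same count as DGL_NUM_SAMPLER
    dgl.distributed.initialize(ip_config_file, num_workers=3)
    pb, _, _, _ = load_partition_book(local_partition_file, rank)
    g = DistGraph(args.graph_name, gpb=pb)
    # model training starts from here
```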
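In the client code above the sampler count is hard-coded as 3 in two places. A minimal sketch of deriving both from one number so they cannot drift apart; `NUM_SAMPLERS` and `client_env` are hypothetical names of my own, not DGL APIs, and the DGL calls are left as comments.

```python
import os

NUM_SAMPLERS = 3  # hypothetical single source of truth for the sampler count

def client_env(num_samplers: int, env=None) -> dict:
    """Hypothetical helper: sets the client-side variables used above,
    with DGL_NUM_SAMPLER taken from the same number that is later
    passed as num_workers to dgl.distributed.initialize.
    """
    if num_samplers < 0:
        raise ValueError("num_samplers must be >= 0")
    env = os.environ if env is None else env
    env["DGL_DIST_MODE"] = "distributed"
    env["DGL_ROLE"] = "client"
    env["DGL_NUM_SAMPLER"] = str(num_samplers)
    return env

# Usage (sketch):
# client_env(NUM_SAMPLERS)
# dgl.distributed.initialize(ip_config_file, num_workers=NUM_SAMPLERS)
```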

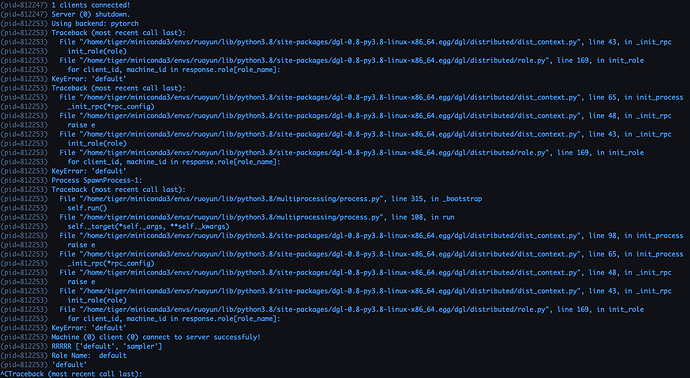

I pretty much followed what is done in launch.py. Then we run into error like this, complaining about key missing for ‘default’ role:

What needs to be done for this ‘default’ role? I searched the docs and code but haven't found anything helpful so far. More generally, how do I properly set up multiple samplers in a distributed environment? Any suggestions?

Thanks a lot!