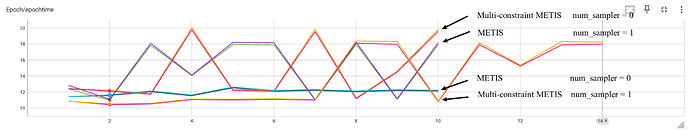

I am training GraphSAGE on the ogbn-products dataset across two machines.

It seems that the epoch times in the following four experiments do not differ much.

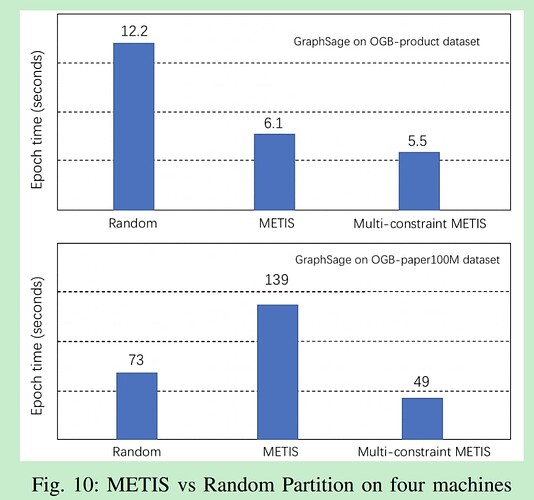

How can I reach a conclusion similar to Fig. 10 in the paper "DistDGL: Distributed graph neural network training for billion-scale graphs"?

The measured epoch time varies within a certain range.

Are the values 6.1 and 5.5 obtained by averaging?
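
For context, this is roughly how I would average the per-epoch times on my side. It is only a minimal sketch: the function name `summarize_epoch_times`, the `warmup` parameter, and the timings in the list are hypothetical placeholders, not my real measurements and not necessarily how the paper computed its numbers.

```python
import statistics


def summarize_epoch_times(epoch_times, warmup=1):
    """Return (mean, sample stdev) of epoch times after dropping warm-up epochs.

    Assumption: the first `warmup` epochs are slower (startup overhead)
    and are excluded from the statistics.
    """
    steady = epoch_times[warmup:]
    if not steady:
        raise ValueError("no epochs left after dropping warm-up")
    mean = statistics.mean(steady)
    # stdev needs at least two samples; report 0.0 for a single epoch.
    std = statistics.stdev(steady) if len(steady) > 1 else 0.0
    return mean, std


# Hypothetical per-epoch timings in seconds (placeholders only).
times = [9.0, 6.0, 6.5, 5.5, 6.0]
mean, std = summarize_epoch_times(times)
print(f"epoch time: {mean:.2f} +/- {std:.2f} s")
```

Reporting the spread next to the mean would make it easier to tell whether the differences between the four experiments are larger than the run-to-run variation.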