Hi All,

I am trying to implement LightGCN, a graph-based recommendation model, with DGL. It is a link prediction task on a large heterograph, so I use EdgeDataLoader, which samples blocks for mini-batch training. The problem is that the output of every layer then has a different shape (a different number of nodes).
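For reference, these are the LightGCN propagation rule and layer combination as given in the LightGCN paper, where $\mathcal{N}_u$ is the set of items user $u$ interacted with, $\mathcal{N}_i$ the set of users who interacted with item $i$, and $K$ the number of layers:

$$
\mathbf{e}_u^{(k+1)} = \sum_{i \in \mathcal{N}_u} \frac{1}{\sqrt{|\mathcal{N}_u|}\sqrt{|\mathcal{N}_i|}}\,\mathbf{e}_i^{(k)},
\qquad
\mathbf{e}_i^{(k+1)} = \sum_{u \in \mathcal{N}_i} \frac{1}{\sqrt{|\mathcal{N}_i|}\sqrt{|\mathcal{N}_u|}}\,\mathbf{e}_u^{(k)}
$$

$$
\mathbf{e}_u = \sum_{k=0}^{K} \alpha_k\,\mathbf{e}_u^{(k)},
\qquad
\mathbf{e}_i = \sum_{k=0}^{K} \alpha_k\,\mathbf{e}_i^{(k)},
\qquad
\alpha_k = \frac{1}{K+1}
$$

The combination step needs $\mathbf{e}^{(0)}, \dots, \mathbf{e}^{(K)}$ for the same set of nodes, which is exactly what the shrinking block shapes make awkward.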

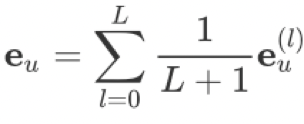

How can I get the final node embeddings by combining the outputs of all layers? To be concrete, here is my model, with the tensor shapes I get at each step:

```python
import torch.nn as nn
import dgl.nn as dglnn


class StochasticTwoLayerRGCN(nn.Module):
    def __init__(self, in_feat, hidden_feat, out_feat, rel_names):
        super().__init__()
        # One GraphConv per relation type, aggregated by HeteroGraphConv.
        self.conv1 = dglnn.HeteroGraphConv({
            rel: dglnn.GraphConv(in_feat, hidden_feat, norm='right')
            for rel in rel_names
        })
        self.conv2 = dglnn.HeteroGraphConv({
            rel: dglnn.GraphConv(hidden_feat, out_feat, norm='right')
            for rel in rel_names
        })

    def forward(self, blocks, x):
        # Question: how do I combine the outputs of the different layers?
        print(x['item'].shape)  # torch.Size([91599, 64])

        x = self.conv1(blocks[0], x)
        print(x['item'].shape)  # torch.Size([91054, 64])
        print(blocks[0])
        # Block(num_src_nodes={'item': 91599, 'user': 52643},
        #       num_dst_nodes={'item': 91077, 'user': 52380},
        #       num_edges={('item', 'iu', 'user'): 2977926, ('user', 'ui', 'item'): 2977966},
        #       metagraph=[('item', 'user', 'iu'), ('user', 'item', 'ui')])

        x = self.conv2(blocks[1], x)
        print(x['item'].shape)  # torch.Size([10796, 64])
        print(blocks[1])
        # Block(num_src_nodes={'item': 91077, 'user': 52380},
        #       num_dst_nodes={'item': 10736, 'user': 10094},
        #       num_edges={('item', 'iu', 'user'): 975459, ('user', 'ui', 'item'): 650950},
        #       metagraph=[('item', 'user', 'iu'), ('user', 'item', 'ui')])

        return x
```
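A minimal sketch of one way to do the combination, assuming the usual DGL block layout: blocks produced by DGL's samplers (via `dgl.to_block` with its default of including destination nodes among the source nodes) list each block's destination nodes as the first `num_dst_nodes` source nodes, and `blocks[i]`'s destination nodes are `blocks[i+1]`'s source nodes. Under that assumption the final output nodes occupy the first rows of every layer's output, so each layer can simply be sliced down to them before summing. `combine_layer_outputs` is a hypothetical helper, not a DGL API; it uses LightGCN's uniform $1/(K+1)$ weights by default.

```python
import torch


def combine_layer_outputs(layer_outputs, num_final_dst, weights=None):
    """Weighted sum of per-layer node embeddings, restricted to the final output nodes.

    layer_outputs: list of dicts {ntype: Tensor}, e.g. [x_input, x_after_conv1, x_after_conv2].
    num_final_dst: dict {ntype: int}, the number of destination nodes of the last block.
    weights: per-layer weights; defaults to the uniform 1 / (K + 1) used by LightGCN.

    Assumes every block's destination nodes are the first rows of its source nodes,
    so the final output nodes occupy rows [0, n) of every layer's output.
    """
    num_layers = len(layer_outputs)
    if weights is None:
        weights = [1.0 / num_layers] * num_layers
    combined = {}
    for ntype, n in num_final_dst.items():
        # Keep only the first n rows (the final output nodes) of each layer, then weight and sum.
        combined[ntype] = sum(w * h[ntype][:n] for w, h in zip(weights, layer_outputs))
    return combined


if __name__ == "__main__":
    # Random tensors whose row counts shrink layer by layer, like the sampled blocks.
    x0 = {"item": torch.randn(100, 64), "user": torch.randn(80, 64)}
    x1 = {"item": torch.randn(60, 64), "user": torch.randn(50, 64)}
    x2 = {"item": torch.randn(10, 64), "user": torch.randn(8, 64)}
    out = combine_layer_outputs([x0, x1, x2], {"item": 10, "user": 8})
    print(out["item"].shape)  # torch.Size([10, 64])
```

Inside `forward`, this would mean keeping the input `x` and the outputs of `conv1` and `conv2` in a list instead of overwriting `x`, then returning `combine_layer_outputs(outputs, {nt: blocks[-1].num_dst_nodes(nt) for nt in blocks[-1].dsttypes})`. It only works when every layer has the same embedding size, which holds here since all the shapes are `[..., 64]`.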