Hi everyone!

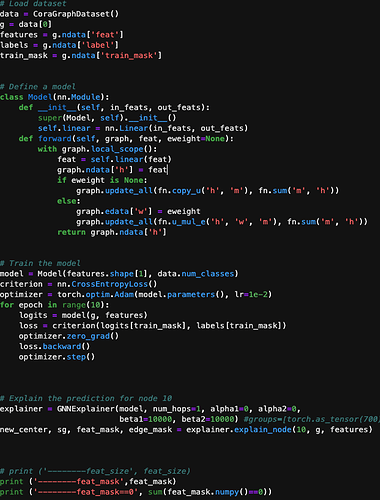

I have tried increasing beta1 and beta2 to larger values in order to get a sparse feature importance vector, but no matter how large the two parameters are, the feature importance is not sparse.

Does ‘sparse’ mean that the feature importance vector should contain zeros? If so, why does GNNExplainer fail to output sparse results?
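To make the two possible readings of ‘sparse’ concrete, here is a minimal sketch of how they could be checked. It assumes the feature importance is available as a plain list of floats. The helper name `sparsity_report`, the threshold `eps`, and the example values are hypothetical and are not part of GNNExplainer or taken from its output.

```python
def sparsity_report(importance, eps=1e-3):
    """Return (fraction of exact zeros, fraction with |value| < eps).

    `importance` is assumed to be a flat sequence of floats.
    `eps` is an arbitrary, hypothetical cutoff for "close to zero".
    """
    n = len(importance)
    if n == 0:
        raise ValueError("importance vector is empty")
    # Strict reading: an entry counts only if it is exactly zero.
    exact = sum(1 for v in importance if v == 0.0) / n
    # Loose reading: an entry counts if its magnitude is below eps.
    near = sum(1 for v in importance if abs(v) < eps) / n
    return exact, near


# Made-up values for illustration only, not real GNNExplainer output.
example = [0.0, 0.0004, 0.31, 0.0009, 0.72]
exact_frac, near_frac = sparsity_report(example)
print(exact_frac, near_frac)
```

Under the strict reading only exact zeros count, while under the loose reading small but non-zero values count as well, so the same vector can look sparse by one measure and dense by the other.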

Thanks a lot!