I have used a GAT model to obtain node embeddings, but the loss is very high and does not decrease during training. It also seems unrelated to the training AUPR in each epoch. Note that the loss I use is the one introduced in GraphSAGE for link prediction. What is the reason, and what should I do?

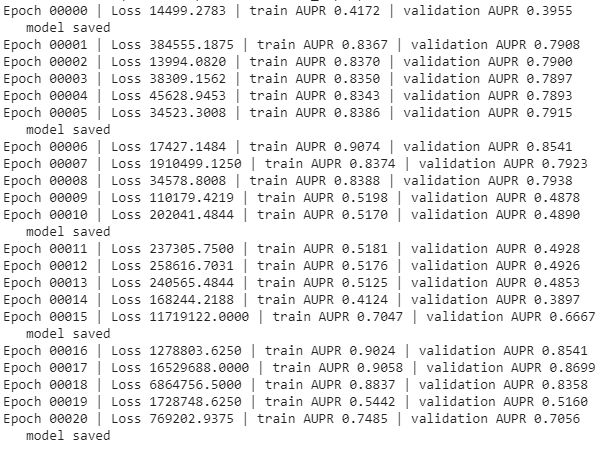

Part of the loss and AUPR output is shown.

Thanks.

How did you choose the hyperparameters? Did you add self loops to your graphs? Self loops can be important for numerical stability with multi-head attention.
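As a rough illustration of the self-loop suggestion: in DGL, `dgl.add_self_loop(g)` adds one self-loop per node. The framework-free sketch below does the same thing on a plain edge list and checks that every node ends up with at least one incoming edge, so the attention softmax never runs over an empty neighbor set. The helper names and the toy graph are made up for illustration.

```python
def add_self_loops(num_nodes, edges):
    """Return the edge list with exactly one (v, v) edge per node."""
    # Drop existing self-loops first so they are not duplicated.
    kept = [(u, v) for (u, v) in edges if u != v]
    return kept + [(v, v) for v in range(num_nodes)]


def in_degrees(num_nodes, edges):
    """Count incoming edges per node for (src, dst) pairs."""
    deg = [0] * num_nodes
    for _, dst in edges:
        deg[dst] += 1
    return deg


# Toy directed graph: node 0 has no incoming edge, node 2 already has a self-loop.
edges = [(0, 1), (1, 2), (2, 2)]
print(in_degrees(3, edges))                     # [0, 1, 2]
print(in_degrees(3, add_self_loops(3, edges)))  # [1, 2, 2]
```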

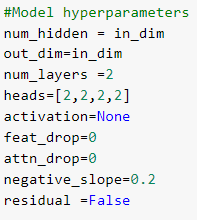

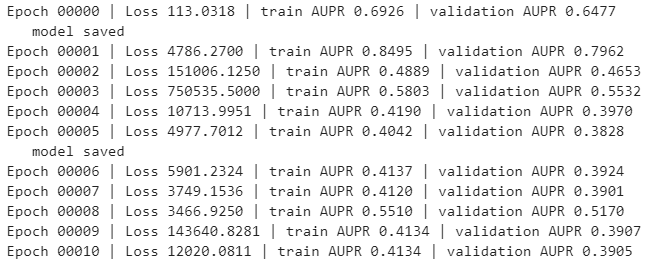

I had not used self-loops before. I have now added them, but there is still no clear relationship between the loss and the AUPR on the training data. The hyperparameters are as follows.
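One general property that can make the two numbers look unrelated (not a diagnosis of this particular run): AUPR depends only on how the scores rank positives against negatives, while a cross-entropy style loss also depends on the magnitude of the scores. The sketch below uses made-up labels and logits, and a hand-written average precision as the AUPR estimate, to show that scaling all scores leaves the ranking metric unchanged while the loss grows.

```python
import math


def average_precision(labels, scores):
    """Mean of precision@rank over the positives, ranking by descending score."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    hits, total = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            total += hits / rank
    return total / hits


def bce_with_logits(labels, logits):
    """Mean binary cross-entropy on raw logits, in a numerically stable form."""
    losses = [max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x)))
              for x, y in zip(logits, labels)]
    return sum(losses) / len(losses)


# Hypothetical edge labels (1 = real edge) and model scores.
labels = [1, 0, 1, 0]
logits = [2.0, 1.0, -0.5, -1.0]
scaled = [50.0 * x for x in logits]  # same ranking, much larger magnitude

print(average_precision(labels, logits), bce_with_logits(labels, logits))
print(average_precision(labels, scaled), bce_with_logits(labels, scaled))
```

The first number printed on each line is identical, while the second is much larger for the scaled scores.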

I have no idea why this happens. It also gives me the following warning at the beginning of training. I do not know whether it is important.

What’s your learning rate? Does a smaller learning rate help? Also, it looks strange to me that your heads has length 4 while num_layers is 2. Typically they should be the same.

Your loss values look weird as the magnitudes are very large. Usually that indicates numerical instability, bugs, or inappropriate training. What was your loss function? If your code was based on one of our examples then pointing us to the example will also be helpful.

Thanks.

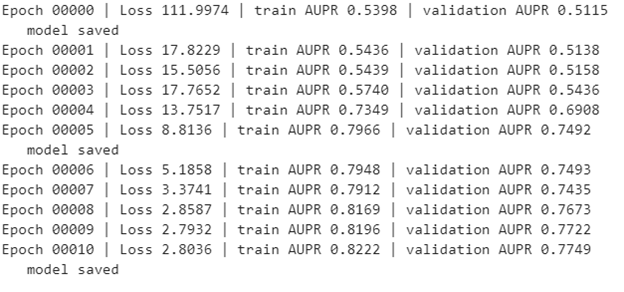

I decreased the learning rate from 2e-3 to 1e-4, and training now seems to work well, as shown.
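A toy sketch of why a smaller learning rate can turn a growing loss into a shrinking one. This is plain gradient descent on a one-dimensional quadratic, not the GAT model from this thread, and the curvature value is picked by hand so that the two learning rates mentioned here fall on either side of the stability threshold `lr < 2 / curvature`.

```python
def run_gd(lr, curvature=1500.0, w0=1.0, steps=20):
    """Gradient descent on f(w) = 0.5 * curvature * w**2; returns the loss per step."""
    w, losses = w0, []
    for _ in range(steps):
        losses.append(0.5 * curvature * w * w)
        w -= lr * curvature * w  # gradient of f is curvature * w
    return losses


large = run_gd(lr=2e-3)  # update factor 1 - 2e-3 * 1500 = -2, so |w| doubles each step
small = run_gd(lr=1e-4)  # update factor 1 - 1e-4 * 1500 = 0.85, so w shrinks each step

print(f"lr=2e-3: first loss {large[0]:.1f}, last loss {large[-1]:.3e}")
print(f"lr=1e-4: first loss {small[0]:.1f}, last loss {small[-1]:.3e}")
```

With the larger rate the loss grows at every step; with the smaller one it decreases monotonically.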

‘num_layers’ is the number of hidden layers; we also have one input layer and one output layer, so heads has 1 + 2 + 1 = 4 entries.
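A minimal sketch of how a heads list of length 4 follows from 2 hidden layers under that convention. The function name and the head counts (8 per layer, 1 for the output) are hypothetical; the actual values are in the hyperparameter screenshot.

```python
def build_heads(num_layers, num_heads, num_out_heads):
    """One head count per GAT layer: input layer, hidden layers, output layer."""
    return [num_heads] * (1 + num_layers) + [num_out_heads]


heads = build_heads(num_layers=2, num_heads=8, num_out_heads=1)
print(heads, len(heads))  # [8, 8, 8, 1] 4
```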

Thanks a lot.

Yes, it was weird, and I think it was because of the large learning rate.

I used the NCE_loss from the GraphSAGE example.
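For reference, the unsupervised loss described in the GraphSAGE paper penalizes a positive pair with -log σ(z_u · z_v) and each sampled negative with -log σ(-z_u · z_n). The framework-free sketch below follows that form with a numerically stable log-sigmoid. It is not the NCE_loss code from the example, which is not shown in this thread, and the function names and embeddings are made up. It also shows how unnormalized, large-magnitude embeddings alone can produce a very large loss value.

```python
import math


def log_sigmoid(x):
    """log(sigmoid(x)) computed without overflow for large |x|."""
    return min(x, 0.0) - math.log1p(math.exp(-abs(x)))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def graphsage_style_loss(z_u, z_pos, z_negs):
    """-log sigma(z_u.z_pos) minus the sum over negatives of log sigma(-z_u.z_neg)."""
    loss = -log_sigmoid(dot(z_u, z_pos))
    for z_n in z_negs:
        loss -= log_sigmoid(-dot(z_u, z_n))
    return loss


# Small embeddings: the loss stays on the order of 1.
small_loss = graphsage_style_loss([1.0, 0.0], [2.0, 0.0], [[-1.0, 0.0], [0.0, 1.0]])

# Large embeddings with a negative sample that looks like a positive:
# the loss is about as large as the dot product itself.
big_loss = graphsage_style_loss([1000.0, 0.0], [1000.0, 0.0], [[1000.0, 0.0]])

print(small_loss, big_loss)
```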