Hello. I’m building a graph classification model using the EdgeGATConv layer. I’m trying to understand every step, but it is hard. I have several questions about my code and would appreciate help from experts. Here is my code.

1. Data Load

I successfully loaded the data from my CSV files.

    import dgl
    import torch

    dataset = dgl.data.CSVDataset('./GC')
    len(dataset)
    # 14513

    graph0, data0 = dataset[0]
    print(graph0)
    # Graph(num_nodes=553, num_edges=737,
    #       ndata_schemes={'feat': Scheme(shape=(3,), dtype=torch.float32)}
    #       edata_schemes={'feat': Scheme(shape=(2,), dtype=torch.float32)})

    print(data0)
    # {'feat': tensor([5790.]), 'label': tensor([1])}

2. Train / Test split, Batch

Then I split the data into train and test sets and batch them.

    from dgl.dataloading import GraphDataLoader
    from torch.utils.data.sampler import SubsetRandomSampler

    num_data = len(dataset)
    num_train = int(num_data * 0.8)

    train_sampler = SubsetRandomSampler(torch.arange(num_train))
    test_sampler = SubsetRandomSampler(torch.arange(num_train, num_data))

    train_dataloader = GraphDataLoader(
        dataset,
        sampler=train_sampler,
        batch_size=100,
        drop_last=False)

    test_dataloader = GraphDataLoader(
        dataset,
        sampler=test_sampler,
        batch_size=100,
        drop_last=False)

3. Modeling

Then I built the model like this.

    import torch.nn as nn
    import torch.nn.functional as F
    from dgl.nn import EdgeGATConv

    class EdgeGATModel(nn.Module):
        def __init__(self, in_feats, edge_feats, hidden_feats, out_feats, num_heads):
            super(EdgeGATModel, self).__init__()
            self.edge_gat1 = EdgeGATConv(in_feats=in_feats,
                                         edge_feats=edge_feats,
                                         out_feats=hidden_feats,
                                         num_heads=num_heads)
            self.edge_gat2 = EdgeGATConv(in_feats=num_heads * hidden_feats,
                                         edge_feats=edge_feats,
                                         out_feats=hidden_feats,
                                         num_heads=num_heads)
            self.classify = nn.Linear(hidden_feats * num_heads, 1)

        def forward(self, graph, node_feats, edge_feats, num_heads):
            # Layer output shape: (num_nodes, num_heads, hidden_feats)
            hidden1 = self.edge_gat1(graph, node_feats, edge_feats)
            # Concatenate the heads: (num_nodes, num_heads * hidden_feats)
            hidden1 = hidden1.view(hidden1.shape[0], -1)
            hidden1 = F.leaky_relu(hidden1)
            hidden2 = self.edge_gat2(graph, hidden1, edge_feats)
            hidden2 = hidden2.view(hidden2.shape[0], -1)
            hidden2 = F.leaky_relu(hidden2)
            # One value per node: (num_nodes, 1)
            hidden2 = self.classify(hidden2)
            return hidden2

4. Training and Evaluating

    def train(model, dataloader):
        model.train()
        for batched_graph, labels in dataloader:
            batched_graph = dgl.add_self_loop(batched_graph)
            optimizer.zero_grad()
            pred = model(batched_graph, batched_graph.ndata['feat'], batched_graph.edata['feat'], num_heads)
            loss = criterion(pred, labels.float())
            loss.backward()
            optimizer.step()

    # Code for evaluation
    def evaluate(model, dataloader):
        model.eval()
        num_correct = 0
        num_tests = 0
        for batched_graph, labels in dataloader:
            batched_graph = dgl.add_self_loop(batched_graph)
            with torch.no_grad():
                pred = model(batched_graph, batched_graph.ndata['feat'], batched_graph.edata['feat'], num_heads)
            pred_labels = (torch.sigmoid(pred) >= 0.5).squeeze().long()
            num_correct += (pred_labels == labels).sum().item()
            num_tests += len(labels)
        accuracy = num_correct / num_tests
        return accuracy

    # Create train and test dataloaders using SubsetRandomSampler
    train_sampler = SubsetRandomSampler(torch.arange(num_train))
    test_sampler = SubsetRandomSampler(torch.arange(num_train, num_data))
    train_dataloader = GraphDataLoader(dataset, sampler=train_sampler, batch_size=100, drop_last=False)
    test_dataloader = GraphDataLoader(dataset, sampler=test_sampler, batch_size=100, drop_last=False)

    # Initialize your model
    model = EdgeGATModel(in_feats=3, edge_feats=2, hidden_feats=64, out_feats=1, num_heads=3)

    # Define your loss function
    criterion = nn.BCEWithLogitsLoss()

    # Define your optimizer
    optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

    # Perform training for epochs
    from tqdm import tqdm

    for epoch in tqdm(range(10), desc="Training Epochs"):
        train(model, train_dataloader)
        accuracy = evaluate(model, test_dataloader)
        print(f"Epoch: {epoch+1}, Test accuracy: {accuracy}")

1st question:

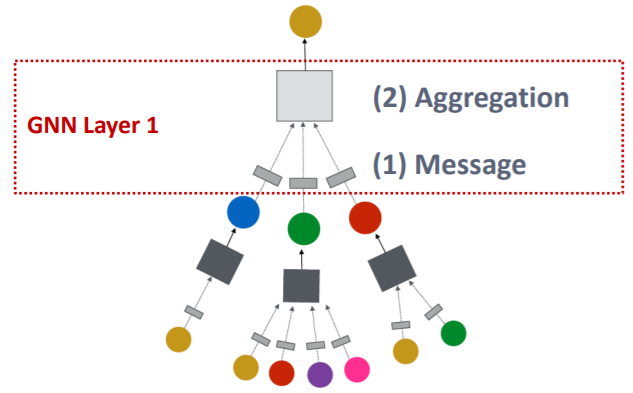

My goal is to build a 2-layer model like this.

Is it right to build the layers the way I did? I’m not sure about the multi-head attention concept.

Ex.) 8 nodes with 20 features (8, 20) → multi-head (3) attention with 15 hidden features → (8, 3, 15) → reshape → (8, 45) → average? Or just use this as the input of layer 2?
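To make the two options in the example concrete, here is a minimal shape-only sketch. It uses a random tensor as a stand-in for the (8, 3, 15) output of one multi-head layer; no DGL layer is involved, and the variable names are illustrative only. As far as I understand the original GAT formulation, hidden layers usually concatenate the heads and averaging is typically reserved for the final layer, but either choice is valid as long as the next layer's `in_feats` matches.

```python
import torch

num_nodes, num_heads, hidden = 8, 3, 15

# Stand-in for the output of one multi-head attention layer:
# one hidden-sized vector per node and per head.
h = torch.randn(num_nodes, num_heads, hidden)

# Option A: concatenate the heads -> (8, 45).
# The next layer then needs in_feats = num_heads * hidden.
h_concat = h.flatten(start_dim=1)

# Option B: average over the heads -> (8, 15).
# The next layer then needs in_feats = hidden.
h_mean = h.mean(dim=1)

print(h_concat.shape)  # torch.Size([8, 45])
print(h_mean.shape)    # torch.Size([8, 15])
```

The model code above corresponds to option A, since `edge_gat2` is created with `in_feats=num_heads * hidden_feats`.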

2nd question:

I’m getting an error in the loss calculation. How should I fix the code so that my pred and label have the same shape?
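One likely cause, judging from the model code: `classify` is applied to every node, so `pred` has one row per node in the batch, while there is only one label per graph. A graph-level readout (pooling node features per graph) before the linear layer would bring the two into line. The sketch below shows the idea in plain PyTorch with made-up sizes; `mean_readout` is a hypothetical helper written for illustration. In DGL, `batched_graph.batch_num_nodes()` provides the per-graph node counts, and `dgl.mean_nodes` performs the same pooling on a feature stored in `ndata`.

```python
import torch

def mean_readout(node_feats, nodes_per_graph):
    """Average node features within each graph of a batch (hypothetical helper).

    node_feats:      (total_nodes, d) features of all nodes in the batch
    nodes_per_graph: (num_graphs,) long tensor with the node count of each graph
    """
    num_graphs = nodes_per_graph.shape[0]
    # Graph index of every node, e.g. [0, 0, 0, 1, 1, ...]
    graph_ids = torch.repeat_interleave(torch.arange(num_graphs), nodes_per_graph)
    sums = torch.zeros(num_graphs, node_feats.shape[1], dtype=node_feats.dtype)
    sums.index_add_(0, graph_ids, node_feats)
    return sums / nodes_per_graph.unsqueeze(1).to(node_feats.dtype)

# Made-up batch: 3 graphs with 3, 5 and 2 nodes; 192 = hidden_feats * num_heads.
nodes_per_graph = torch.tensor([3, 5, 2])
node_feats = torch.randn(10, 192)
classify = torch.nn.Linear(192, 1)

graph_feats = mean_readout(node_feats, nodes_per_graph)  # (3, 192)
logits = classify(graph_feats).squeeze(-1)               # (3,)
labels = torch.tensor([1, 0, 1])                         # one label per graph
loss = torch.nn.BCEWithLogitsLoss()(logits, labels.float())
```

Note also that `dataset[0]` returns a dict with a `'label'` key, so the second element yielded by the dataloader may be a dict as well; if so, the label tensor would be `labels['label']` rather than `labels`. This is an assumption based on the printed `data0`, not something verified here.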

Thanks for reading. I really appreciate any reply or advice.