There is a very large graph in my code, and I am trying to break it into subgraphs.

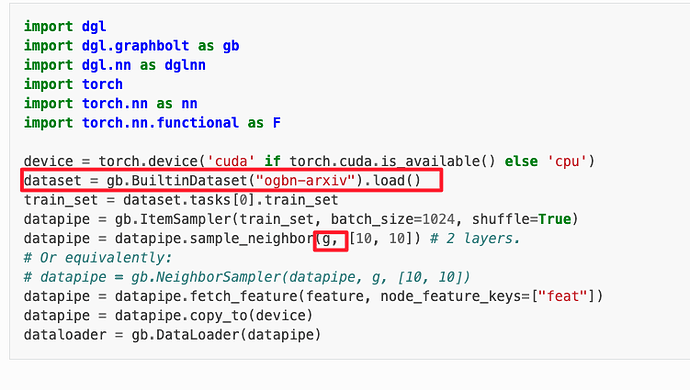

Unfortunately, when I try this on my graph:

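```python
import dgl
import dgl.graphbolt as gb
import torch

my_graph = dgl.graph(edge_list, idtype=dtype)  # edge_list and dtype defined elsewhere
item_set = gb.ItemSet(torch.arange(my_graph.num_nodes(), dtype=torch.int32), names="seed_nodes")
```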

This does not work.

I looked further into the docs, and it seems the graph type may be wrong:

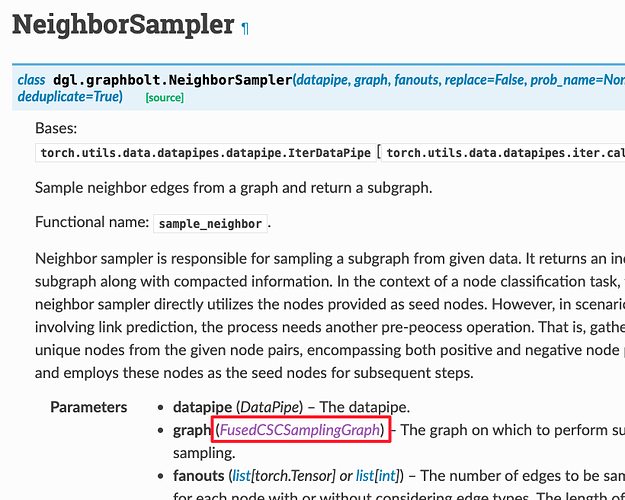

Here is where I get stuck. The docs say:

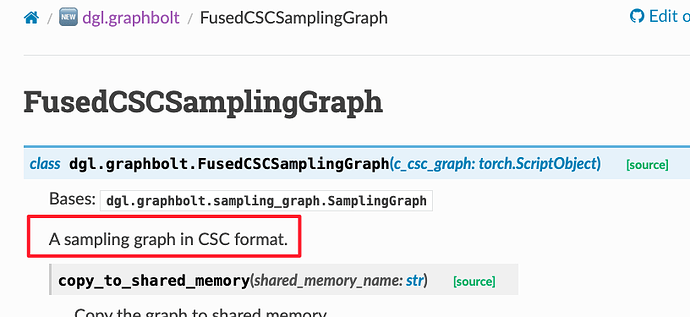

I am confused and would appreciate more explanation and help: how do I convert my graph into this CSC graph?
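For context on what "CSC graph" means here: CSC (compressed sparse column) stores the adjacency matrix column by column, so for each node `j` the slice `indices[indptr[j]:indptr[j + 1]]` lists the source nodes of the edges pointing into `j`. That layout makes "give me the in-neighbors of this seed node" a cheap slice, which is what neighbor sampling needs. Below is a minimal pure-Python sketch, assuming a directed edge list given as two lists `src` and `dst`; the helper names `edges_to_csc` and `in_neighbors` are hypothetical and not part of DGL.

```python
def edges_to_csc(num_nodes, src, dst):
    """Build CSC arrays (indptr, indices) from a directed edge list.

    indices[indptr[j]:indptr[j + 1]] holds the source nodes of all edges ending at node j.
    """
    if len(src) != len(dst):
        raise ValueError("src and dst must have the same length")
    # Count the in-degree of every destination node (shifted by one for the prefix sum).
    indptr = [0] * (num_nodes + 1)
    for d in dst:
        indptr[d + 1] += 1
    # Prefix sum turns per-column counts into column start offsets.
    for i in range(num_nodes):
        indptr[i + 1] += indptr[i]
    # Scatter each source into the next free slot of its destination's column.
    indices = [0] * len(src)
    cursor = indptr[:-1]  # slicing makes a copy, so indptr itself is not modified
    for s, d in zip(src, dst):
        indices[cursor[d]] = s
        cursor[d] += 1
    return indptr, indices


def in_neighbors(indptr, indices, node):
    """Return the source nodes of all edges ending at `node`."""
    return indices[indptr[node]:indptr[node + 1]]


if __name__ == "__main__":
    # Edges: 0->1, 0->2, 1->2, 2->3, 3->0
    indptr, indices = edges_to_csc(4, [0, 0, 1, 2, 3], [1, 2, 2, 3, 0])
    print(indptr)                                # [0, 1, 2, 4, 5]
    print(indices)                               # [3, 0, 0, 1, 2]
    print(in_neighbors(indptr, indices, 2))      # [0, 1]
```

In practice you would not build this by hand. Assuming a DGL 2.x release whose `dgl.graphbolt` module matches the current docs, `gb.from_dglgraph(my_graph)` converts an existing `DGLGraph` into GraphBolt's CSC sampling graph, and `gb.fused_csc_sampling_graph(csc_indptr, indices)` builds one directly from `torch` tensors laid out as above. Please check the API reference for your installed version, since GraphBolt names have changed between releases.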

I would be grateful for any help.