In the API documentation:

https://docs.dgl.ai/api/python/nn.pytorch.html?highlight=gatconv#dgl.nn.pytorch.conv.GATConv

the GATConv API doesn’t have a parameter called num-hidden:

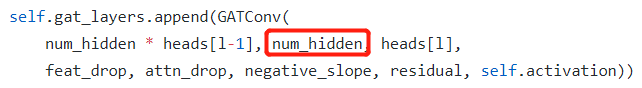

However, the PyTorch GAT example does have a num-hidden parameter:

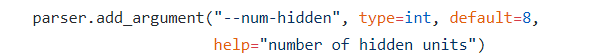

Besides, the argparse part of train.py uses ‘num-hidden’ rather than ‘num_hidden’, but the code still works. Why is that?

I’m confused about what this parameter means. Is it needed, and are ‘num-hidden’ and ‘num_hidden’ the same?
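
On the argparse part of the question, here is a minimal sketch of what I believe is happening. For optional arguments, Python's argparse derives the attribute name from the long flag and replaces hyphens with underscores, so a `--num-hidden` flag would be read back as `args.num_hidden`. The `type` and `default` values below are assumptions for illustration and are not copied from train.py.

```python
import argparse

parser = argparse.ArgumentParser()
# Flag spelled with a hyphen on the command line.
# type and default are illustrative assumptions, not taken from train.py.
parser.add_argument("--num-hidden", type=int, default=8)

# Parse an explicit argument list instead of sys.argv so the sketch is self-contained.
args = parser.parse_args(["--num-hidden", "16"])

# argparse stores the value under the underscore form of the flag name.
print(args.num_hidden)
```

If that is right, ‘num-hidden’ and ‘num_hidden’ would refer to the same value: the first is the command-line spelling and the second is the Python attribute name.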