Hi team,

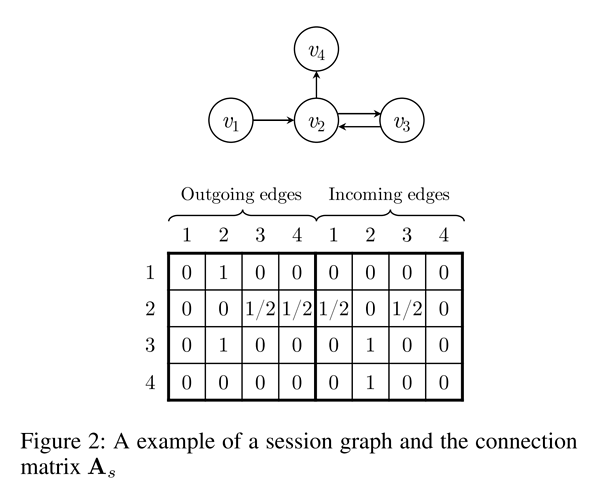

I am working on reproducing the Gated Graph Neural Network (GGNN). In that work, the adjacency matrix A is the concatenation of the outgoing adjacency matrix A_out and the incoming adjacency matrix A_in, so for n nodes A has shape n × 2n. I then want to feed A into GatedGraphConv.
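Concatenating two n × n matrices column-wise gives an n × 2n matrix. Multiplying it by the stacked, per-direction transformed node states is the same as summing an outgoing aggregation and an incoming aggregation. Here is a minimal NumPy sketch. It assumes H holds node states as rows and that W_out and W_in are hypothetical per-direction weight matrices; the GGNN paper's exact layout may differ.

```python
import numpy as np

# Adjacency matrices copied from the post (4 nodes).
A_out = np.array([[0, 1, 0, 0],
                  [0, 0, 0.5, 0.5],
                  [0, 1, 0, 0],
                  [0, 0, 0, 0]])
A_in = np.array([[0, 0, 0, 0],
                 [0.5, 0, 0.5, 0],
                 [0, 1, 0, 0],
                 [0, 1, 0, 0]])

# GGNN-style concatenation along the columns: shape (n, 2n).
A = np.concatenate([A_out, A_in], axis=1)

# Hypothetical node states H (n x d) and per-direction weights (d x d).
rng = np.random.default_rng(0)
n, d = A_out.shape[0], 3
H = rng.standard_normal((n, d))
W_out = rng.standard_normal((d, d))
W_in = rng.standard_normal((d, d))

# Multiplying A by the stacked, transformed states ...
a_concat = A @ np.vstack([H @ W_out, H @ W_in])
# ... equals the sum of the two directional aggregations (block matrix product).
a_split = A_out @ H @ W_out + A_in @ H @ W_in

print(A.shape)                         # (4, 8)
print(np.allclose(a_concat, a_split))  # True
```

This identity means the concatenated matrix never has to exist as a single graph adjacency. Two edge types with separate weights can express the same computation.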

Here is my code for building separate incoming and outgoing graphs:

```python
import numpy as np
import networkx as nx
import dgl

A_out = np.array([[0, 1, 0, 0],
                  [0, 0, 0.5, 0.5],
                  [0, 1, 0, 0],
                  [0, 0, 0, 0]])

A_in = np.array([[0, 0, 0, 0],
                 [0.5, 0, 0.5, 0],
                 [0, 1, 0, 0],
                 [0, 1, 0, 0]])

# Concatenating A_out and A_in gives a non-square (4x8) matrix,
# which networkx rejects as an adjacency matrix.

# Transform to networkx.Graph
nx_g_out = nx.from_numpy_array(A_out)
nx_g_in = nx.from_numpy_array(A_in)
# nx_g_out = {0: {1: {'weight': 1.0}}, 1: {0: {'weight': 1.0}, 2: {'weight': 1.0}, 3: {'weight': 0.5}}, 2: {1: {'weight': 1.0}}, 3: {1: {'weight': 0.5}}}
# nx_g_in = {0: {1: {'weight': 0.5}}, 1: {0: {'weight': 0.5}, 2: {'weight': 1.0}, 3: {'weight': 1.0}}, 2: {1: {'weight': 1.0}}, 3: {1: {'weight': 1.0}}}

# Transform to DGLGraph
dgl_g_out = dgl.from_networkx(nx_g_out)
dgl_g_in = dgl.from_networkx(nx_g_in)

# The adjacency of dgl_g_out and dgl_g_in comes out identical:
'''
tensor(indices=tensor([[0, 1, 1, 1, 2, 3],
                       [1, 0, 2, 3, 1, 1]]),
       values=tensor([1., 1., 1., 1., 1., 1.]),
       size=(4, 4), nnz=6, layout=torch.sparse_coo)

tensor(indices=tensor([[0, 1, 1, 1, 2, 3],
                       [1, 0, 2, 3, 1, 1]]),
       values=tensor([1., 1., 1., 1., 1., 1.]),
       size=(4, 4), nnz=6, layout=torch.sparse_coo)
'''
```
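The two printed adjacencies likely match for two reasons. First, `nx.from_numpy_array` builds an undirected `nx.Graph` unless `create_using=nx.DiGraph` is passed. Second, `dgl.from_networkx` only copies edge attributes named in `edge_attrs`, so the 0.5 weights are not carried over. The NumPy-only sketch below needs neither networkx nor DGL. It checks that the directed edge sets of A_out and A_in differ while their symmetrized (undirected) versions coincide. The helper names are illustrative only.

```python
import numpy as np

# Same matrices as in the post.
A_out = np.array([[0, 1, 0, 0],
                  [0, 0, 0.5, 0.5],
                  [0, 1, 0, 0],
                  [0, 0, 0, 0]])
A_in = np.array([[0, 0, 0, 0],
                 [0.5, 0, 0.5, 0],
                 [0, 1, 0, 0],
                 [0, 1, 0, 0]])

def directed_edges(A):
    """Hypothetical helper: (row, col) pairs with a nonzero entry."""
    rows, cols = np.nonzero(A)
    return set(zip(rows.tolist(), cols.tolist()))

def undirected_edges(A):
    """Hypothetical helper: edges with direction dropped, as an undirected graph keeps them."""
    return {tuple(sorted(e)) for e in directed_edges(A)}

print(sorted(directed_edges(A_out)))  # [(0, 1), (1, 2), (1, 3), (2, 1)]
print(sorted(directed_edges(A_in)))   # [(1, 0), (1, 2), (2, 1), (3, 1)]
print(undirected_edges(A_out) == undirected_edges(A_in))  # True
```

To keep the direction and the weights in the original pipeline, pass `create_using=nx.DiGraph` to `nx.from_numpy_array` and `edge_attrs=['weight']` to `dgl.from_networkx`.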

How can I concatenate the outgoing and incoming adjacency matrices to build a graph that GatedGraphConv can consume?
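One possible approach, sketched here under stated assumptions, avoids concatenating the matrices at all. Instead, it merges both directions into one edge list and tags each edge with a type (0 = out, 1 = in). It assumes the convention that a nonzero `A[i, j]` means node i aggregates from node j, so the message edge runs from j to i. The NumPy code then checks that typed, weighted message passing reproduces the concatenated form `[A_out, A_in] @ [H W_out; H W_in]`. `typed_edges`, `H` and `W` are hypothetical names.

```python
import numpy as np

A_out = np.array([[0, 1, 0, 0],
                  [0, 0, 0.5, 0.5],
                  [0, 1, 0, 0],
                  [0, 0, 0, 0]])
A_in = np.array([[0, 0, 0, 0],
                 [0.5, 0, 0.5, 0],
                 [0, 1, 0, 0],
                 [0, 1, 0, 0]])

def typed_edges(mats):
    """Hypothetical helper: stack per-direction matrices into one typed edge list.

    Assumed convention: nonzero A[i, j] -> message edge src=j, dst=i.
    """
    src, dst, etype, weight = [], [], [], []
    for t, A in enumerate(mats):
        rows, cols = np.nonzero(A)
        src.append(cols)
        dst.append(rows)
        etype.append(np.full(len(rows), t))
        weight.append(A[rows, cols])
    return (np.concatenate(src), np.concatenate(dst),
            np.concatenate(etype), np.concatenate(weight))

src, dst, etype, weight = typed_edges([A_out, A_in])  # type 0 = out, 1 = in

# One propagation step with per-type weights, written as explicit message passing.
rng = np.random.default_rng(0)
n, d = A_out.shape[0], 3
H = rng.standard_normal((n, d))
W = rng.standard_normal((2, d, d))  # hypothetical: W[0] = W_out, W[1] = W_in

# Message on edge e: weight[e] * (H[src[e]] @ W[etype[e]])
msgs = weight[:, None] * np.einsum("ed,edk->ek", H[src], W[etype])
a_edges = np.zeros((n, d))
np.add.at(a_edges, dst, msgs)  # sum incoming messages at each destination node

# Same result as the concatenated-matrix form.
A = np.concatenate([A_out, A_in], axis=1)
a_concat = A @ np.vstack([H @ W[0], H @ W[1]])
print(len(src))                        # 8
print(np.allclose(a_edges, a_concat))  # True
```

Under these assumptions, the arrays could become a single homogeneous DGL graph via `dgl.graph((torch.tensor(src), torch.tensor(dst)), num_nodes=4)`. `torch.tensor(etype)` would then be passed as the `etypes` argument of a `GatedGraphConv` constructed with `n_etypes=2`. One caveat is worth checking against the docs for your DGL version. As far as I can tell, `GatedGraphConv.forward(graph, feat, etypes)` takes no edge-weight argument, so the 0.5 normalizations in `weight` would be dropped unless a custom layer applies them.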