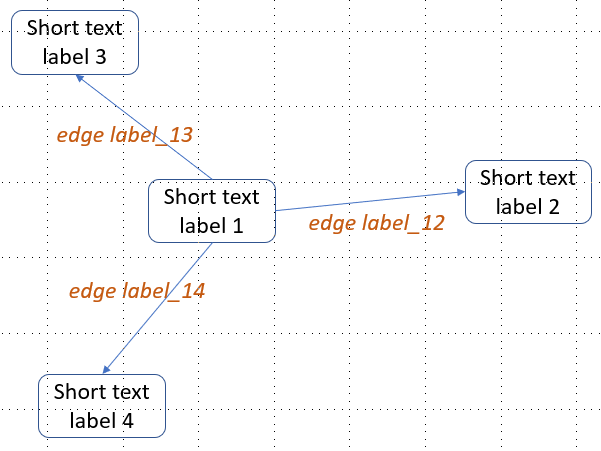

Hi. I have many small knowledge graphs, each with only a few nodes (10-20), and every node and edge has a textual label (see the attached image). I want to do contrastive learning on graph embeddings, so I need to compute an embedding for each small knowledge graph, with node/edge features generated from sentence embeddings (e.g. BERT). Could anyone tell me how to do that with the DGL library? Thank you.

Perhaps this tutorial helps: Training a GNN for Graph Classification — DGL 0.9.0 documentation

You can also find some examples on contrastive learning here: dgl/examples at master · dmlc/dgl · GitHub

You will also need to initialize the node/edge representations from the text labels, e.g., with a BERT model.
