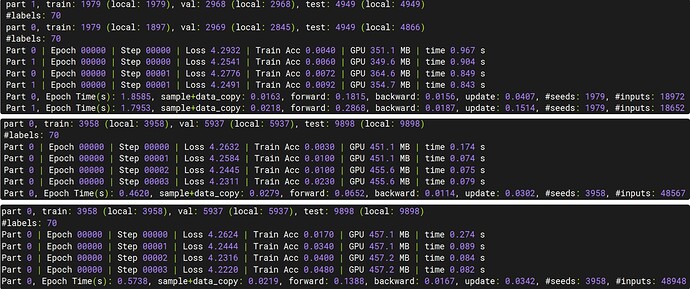

I’m trying to set up distributed training with DistDGL, following the example at dgl/examples/distributed/graphsage in the dmlc/dgl GitHub repository. I followed its README.md verbatim.

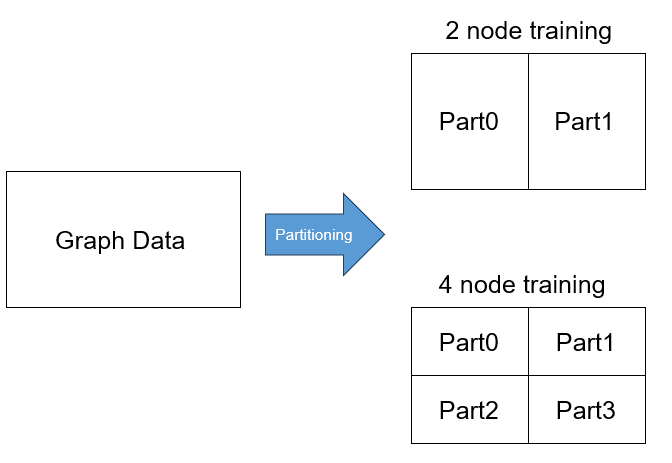

While comparing distributed training on two nodes and on four nodes, I ran into a question about the time taken by each training epoch.

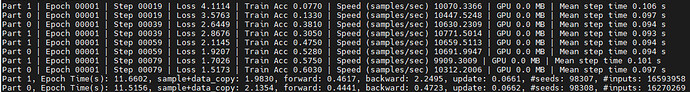

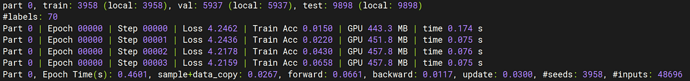

This is the per-epoch training output with two nodes.

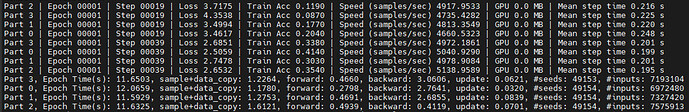

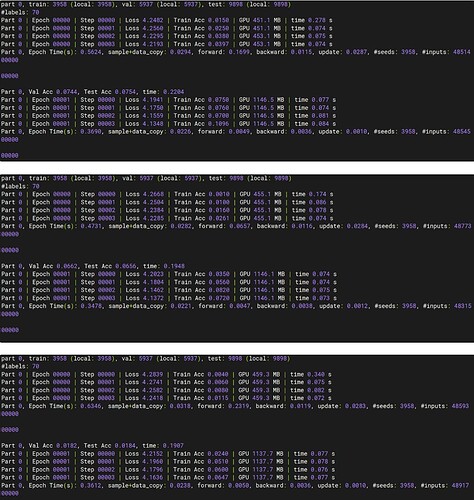

This is the per-epoch training output with four nodes.

With 4 nodes, the “Mean step time” is about twice as long as with 2 nodes.

Since the batch size is the same in both runs, I expected the “Mean step time” to be similar, but it is not.

Why do I see these results? Is there a communication problem?