Hi, I have tried the multi-GPU GraphSAGE example in DGL with `use_uva=True`. However, I notice that as the number of processes and GPUs increases, the CPU memory usage also increases. I suspect this is caused by UVA. Is it possible to share the UVA data across processes in multi-GPU training?
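To make the suspected cause concrete, here is a back-of-the-envelope model. It is only a sketch under an assumption I have not confirmed in DGL's source: that each spawned training process ends up holding its own private copy of the graph structure and node features in host memory, instead of all processes mapping one shared copy. The function name `estimated_host_bytes` and the sizes below are hypothetical and are not taken from my measurements.

```python
GiB = 1024 ** 3


def estimated_host_bytes(graph_bytes: int, feature_bytes: int,
                         num_procs: int, shared: bool) -> int:
    """Hypothetical model of host (CPU) memory held for UVA sampling.

    Assumption: without sharing, every process keeps its own copy of the
    graph and features; with sharing, one copy is mapped by all processes.
    """
    if num_procs < 1:
        raise ValueError("num_procs must be at least 1")
    per_copy = graph_bytes + feature_bytes
    copies = 1 if shared else num_procs
    return per_copy * copies


if __name__ == "__main__":
    # Illustrative sizes only; these are not measured values.
    graph_bytes = 2 * GiB
    feature_bytes = 6 * GiB
    for n in (1, 2, 4, 8):
        private = estimated_host_bytes(graph_bytes, feature_bytes, n, shared=False)
        shared = estimated_host_bytes(graph_bytes, feature_bytes, n, shared=True)
        print(f"{n} procs: private copies {private // GiB} GiB, "
              f"shared copy {shared // GiB} GiB")
```

If the assumption holds, host memory grows linearly with the number of processes in the private-copy case and stays flat in the shared case, which is why sharing the UVA data would help.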

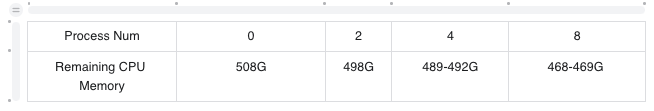

The remaining CPU memory is listed in the following table.