Hi there,

Could you please explain the backward function of GSpMM (dgl/sparse.py at master · dmlc/dgl · GitHub)?

There are several things I do not understand.

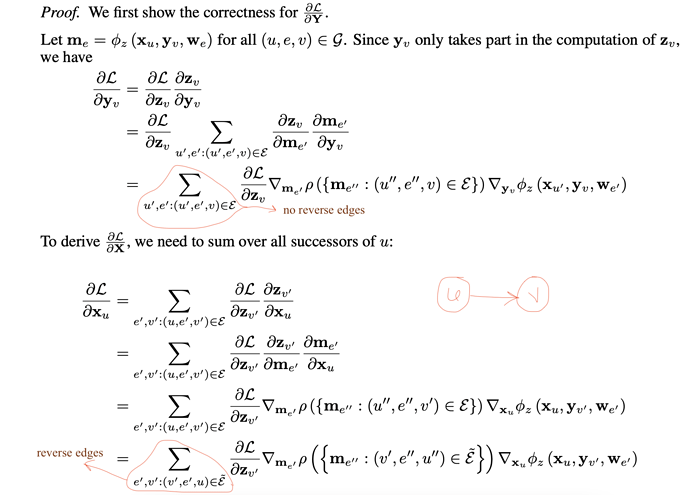

- Why is the input graph reversed?

- If I use only sum as the aggregation in SpMM, what is the corresponding backward operation, and why?

- Why is SDDMM used in the backward function of GSpMM?

It would be great if you could provide a detailed description of it. That would really help me understand.

Best,

Khaled