Hi all,

Question: My model has edge features, and I want to adapt the DGL Heterogeneous Graph Transformer (HGT) to use them. What is the best way to implement it?

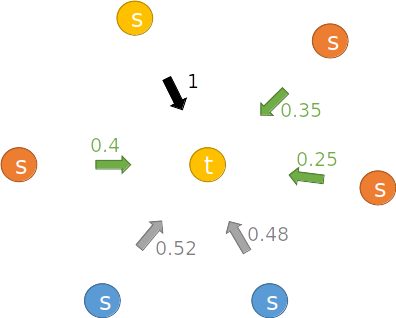

Context: Given source (s) and target (t) nodes, HGT computes K(s), Q(t) and V(s), then calls `apply_edges` with K and Q to compute the attention score `t`: `sub_graph.apply_edges(fn.v_dot_u('q', 'k', 't'))`. It then calls the code below to send V weighted by the attention (the message) to each target node, sum the source messages per edge type, and take the mean across the per-edge-type sums:

```python
G.multi_update_all({etype: (fn.u_mul_e('v', 't', 'm'), fn.sum('m', 't'))
                    for etype in edge_dict},
                   cross_reducer='mean')
```
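For readers less familiar with DGL's builtins, here is a minimal NumPy sketch of what this pipeline computes for a single head and a single target node type. Assumptions: each edge type is passed as hypothetical `(src, dst, k, q, v)` arrays, the scores are normalized with a softmax over each target's incoming edges (assumed here, since attention scores are normally normalized that way), and HGT's scaling and relation-specific weights are left out. `hgt_style_update` is an illustrative name, not a DGL function.

```python
import numpy as np

def hgt_style_update(num_dst, etype_data):
    """Single-head sketch of attention-weighted message passing per edge type,
    followed by a mean across edge types (hypothetical helper, not DGL API).

    etype_data: list of (src, dst, k, q, v) tuples, one per edge type, where
    src/dst are per-edge node indices, k/v are source-node arrays and q is a
    target-node array.
    """
    per_type = []
    for src, dst, k, q, v in etype_data:
        # Like fn.v_dot_u('q', 'k', 't'): score of edge (s -> t) is q[t] . k[s]
        t = np.einsum('ed,ed->e', q[dst], k[src])
        # Assumed step: softmax over each target node's incoming edges
        t_max = np.full(num_dst, -np.inf)
        np.maximum.at(t_max, dst, t)
        w = np.exp(t - t_max[dst])
        denom = np.zeros(num_dst)
        np.add.at(denom, dst, w)
        a = w / denom[dst]
        # Like fn.u_mul_e('v', 't', 'm') + fn.sum('m', 't'): scatter-add messages
        m = v[src] * a[:, None]
        out = np.zeros((num_dst, v.shape[1]))
        np.add.at(out, dst, m)
        per_type.append(out)
    # Like cross_reducer='mean': plain average of the per-edge-type results
    return np.mean(per_type, axis=0)
```

Note that targets with no incoming edges of a given type receive zeros for that type in this sketch, and those zeros still enter the average.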

I believe what I want is for the edge features to affect the V calculation (and possibly K), which is currently based only on node features: `v = v_linear(h[srctype]).view(-1, self.n_heads, self.d_k)`. My guess is that V and K would then have to be computed inside `.apply_edges`, with the input being `concatenate(node_features, edge_features)`. My concern is that this would use far more resources, at least memory-wise, because the concatenation needs a copy of the source node features for every edge.

I am new to DGL, so any ideas on how to implement this efficiently would be much appreciated (my graph has millions of edges). Thanks!
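One way to look at the memory concern: applying a single linear layer to a concatenation is the same as applying separate linear maps to the node part and the edge part and adding the results, i.e. `[h_s, e] @ W == h_s @ W_h + e @ W_e`, where `W_h` and `W_e` are the top and bottom row blocks of `W`. A minimal NumPy sketch, assuming the row-vector convention and no bias; `split_linear` and `edge_aware_v` are hypothetical names, not DGL or HGT APIs. Node features are projected once per node and edge features once per edge, so the |E| × (d_node + d_edge) concatenated input is never built (the |E| × d_out per-edge V is still needed whenever V depends on the edge).

```python
import numpy as np

def split_linear(W, d_node):
    """Split a weight acting on [h_src, e] into node and edge row blocks."""
    return W[:d_node], W[d_node:]

def edge_aware_v(h_src, e, src, W):
    """Per-edge V equal to concat(h_src[src], e) @ W, without building the concat.

    h_src: (num_src_nodes, d_node) source node features
    e:     (num_edges, d_edge) edge features
    src:   (num_edges,) source node index of each edge
    W:     (d_node + d_edge, d_out) weight of a hypothetical linear layer on the concat
    """
    W_node, W_edge = split_linear(W, h_src.shape[1])
    v_node = h_src @ W_node   # computed once per source node
    v_edge = e @ W_edge       # computed once per edge
    return v_node[src] + v_edge  # gather node part onto edges, add edge part

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    h = rng.normal(size=(5, 3))       # 5 source nodes, 3 node features
    e = rng.normal(size=(7, 2))       # 7 edges, 2 edge features
    src = rng.integers(0, 5, size=7)  # source node of each edge
    W = rng.normal(size=(5, 4))       # (3 + 2) inputs -> 4 outputs
    concat_version = np.concatenate([h[src], e], axis=1) @ W
    print(np.allclose(edge_aware_v(h, e, src, W), concat_version))  # True
```

In DGL terms, the node part could presumably stay as `v_linear(h[srctype])` in the source node data, the edge part could live in the edge data, and the two could be added per edge with a builtin such as `fn.u_add_e` inside `apply_edges`. With `torch.nn.Linear`, whose `weight` has shape `(out_features, in_features)`, the split would be along the columns of `weight`, and the bias only needs to be added once. The same decomposition applies to K. Since the messages are then summed with attention weights `a_e`, the edge part could in principle also be aggregated before projecting, as `sum_e a_e * (e_e @ W_e) == (sum_e a_e * e_e) @ W_e`, which keeps the per-edge tensor at d_edge width.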