My task is graph-level classification, not node-level or edge-level classification.

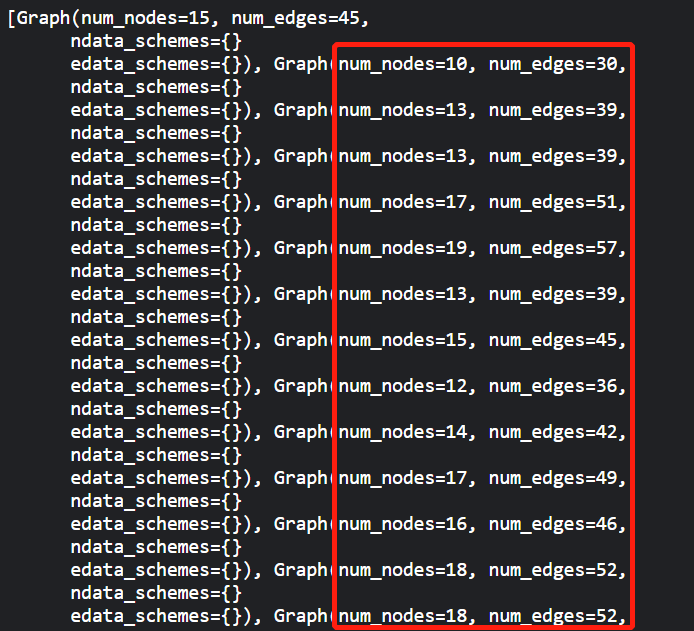

The graphs in the training and test datasets are not the same. They differ in shape, i.e. in the number of nodes and edges, like the MiniGCDataset used in the tutorial "Batched Graph Classification with DGL" in the DGL 0.2 documentation. In addition, I also take each node's own features into consideration.
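A minimal numpy sketch (not DGL code) of how graphs with different numbers of nodes can still yield one fixed-size vector per graph for graph-level classification. It assumes a mean-pooling readout over node representations; `mean_readout` and the classifier weights `W_cls` are hypothetical names used only for illustration.

```python
import numpy as np

def mean_readout(node_feats, graph_ids, num_graphs):
    """Average node representations per graph (hypothetical helper).

    node_feats: (total_nodes, d) features of all nodes in a batch
    graph_ids:  (total_nodes,) index of the graph each node belongs to
    Returns a (num_graphs, d) array: one fixed-size vector per graph.
    Assumes every graph has at least one node.
    """
    d = node_feats.shape[1]
    sums = np.zeros((num_graphs, d))
    np.add.at(sums, graph_ids, node_feats)  # sum node rows into their graph's row
    counts = np.bincount(graph_ids, minlength=num_graphs).reshape(-1, 1)
    return sums / counts

# Toy batch: graph 0 has 3 nodes, graph 1 has 5 nodes, 4-dim node features.
rng = np.random.default_rng(0)
feats = rng.normal(size=(8, 4))
gids = np.array([0, 0, 0, 1, 1, 1, 1, 1])
h_graph = mean_readout(feats, gids, num_graphs=2)  # shape (2, 4)

# Hypothetical linear classifier: its shape depends only on feature and class counts.
W_cls = rng.normal(size=(4, 2))
logits = h_graph @ W_cls  # shape (num_graphs, num_classes) = (2, 2)
```

The readout is what removes the dependence on graph size: whatever the node count, each graph ends up as a `d`-dimensional vector before classification.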

Therefore, my setting does not match the original GCN setting, which assumes a single, fixed graph. However, I find that the size of the weight matrix in the GCN formulation does not depend on the number of nodes: it is (node feature dimension, output dimension). So I am confused about why GCN is said to fail at inductive learning. One explanation I have heard is that the Laplacian matrix is what matters, but I am not very familiar with the theory. Still, GCN seems to me only slightly different from GraphSAGE with the mean aggregator.
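To make the comparison concrete, here is a minimal numpy sketch (not the DGL `GraphConv`/`SAGEConv` API) of one GCN layer, $H' = \sigma(\tilde D^{-1/2} \tilde A \tilde D^{-1/2} H W)$ with $\tilde A = A + I$, next to a GraphSAGE-style mean-aggregator layer. The helper names `gcn_layer`, `sage_mean_layer`, and `path_graph` are hypothetical, and the GraphSAGE weight $W \cdot [h_v \,\Vert\, \mathrm{mean}_{u \in N(v)} h_u]$ is written as two matrices `W_self` and `W_neigh`, which is equivalent to the concatenated form.

```python
import numpy as np

def gcn_layer(A, H, W):
    """One GCN layer: relu(D^-1/2 (A + I) D^-1/2 H W)."""
    A_hat = A + np.eye(A.shape[0])  # add self-loops
    deg = A_hat.sum(axis=1)         # degrees of A_hat, always >= 1
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    A_norm = d_inv_sqrt[:, None] * A_hat * d_inv_sqrt[None, :]  # symmetric normalization
    return np.maximum(A_norm @ H @ W, 0.0)

def sage_mean_layer(A, H, W_self, W_neigh):
    """GraphSAGE-mean style layer: relu(H W_self + mean_neighbors(H) W_neigh)."""
    deg = A.sum(axis=1, keepdims=True)
    neigh_mean = (A @ H) / np.maximum(deg, 1.0)  # isolated nodes get a zero neighbor mean
    return np.maximum(H @ W_self + neigh_mean @ W_neigh, 0.0)

def path_graph(n):
    """Adjacency matrix of an undirected path 0-1-...-(n-1) (toy example)."""
    A = np.zeros((n, n))
    for i in range(n - 1):
        A[i, i + 1] = A[i + 1, i] = 1.0
    return A

rng = np.random.default_rng(0)
in_dim, out_dim = 4, 8
W = rng.normal(size=(in_dim, out_dim))  # shape does not depend on the number of nodes
W_self = rng.normal(size=(in_dim, out_dim))
W_neigh = rng.normal(size=(in_dim, out_dim))

for n in (3, 5):  # two graphs of different sizes, same weights
    A = path_graph(n)
    H = rng.normal(size=(n, in_dim))
    print(n, gcn_layer(A, H, W).shape, sage_mean_layer(A, H, W_self, W_neigh).shape)
# 3 (3, 8) (3, 8)
# 5 (5, 8) (5, 8)
```

In this sketch both layers run unchanged on the 3-node and 5-node graphs with the same weights; they differ in how neighbor features are normalized (symmetric degree normalization with self-loops versus a plain neighbor mean) and in whether the node's own feature gets a separate weight matrix.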

I am new to GNNs, so I am quite confused about this.