Thanks for your clarification. There is one last thing that I want to confirm.

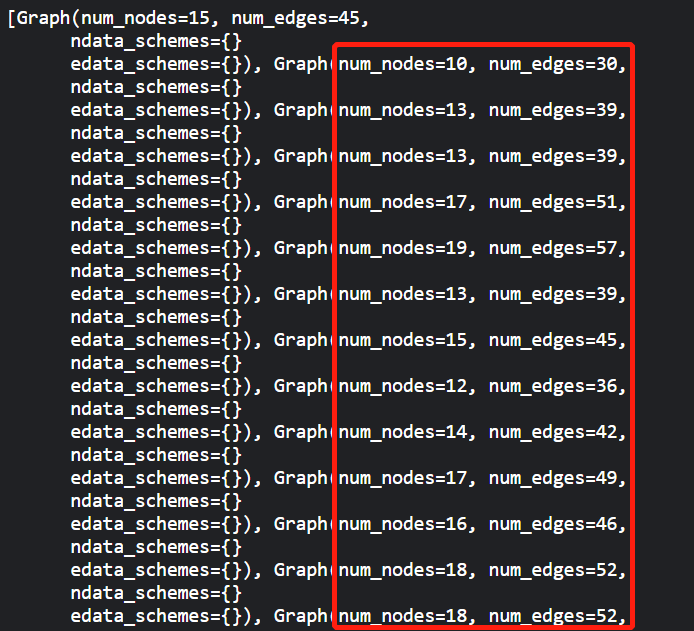

Whether I can just modify the dataset to mine in your tutorial Batched Graph Classification with DGL — DGL 0.2 documentation to do the inductive graph-level classification.

I am concerned about this because the dataset i.e. MiniGCDataset provided in this tutorial only contains the structure information but not the node’s own feature. Therefore, I guess the position of the nodes doesn’t matter.

But my dataset contains the node’s own features that indicate the node’s type additionally. So I am not sure whether the same type of node should be in the same position i.e. the alignment though in different graphs. In the original matrix multiply calculation form, it requires the input of the adjacency matrix. In this setting, I think the same type of the node should be in the same position of the adjacency matrix i.e. the alignment though they express different graphs.

But in DGL, I don’t find the explicit call of the adjacency matrix but like the “message passing” described in your design of the framework. So I think my problem of the position could be addressed using DGL.

Thanks for your help again. This really bothers me a lot but it is important.