The link to the tutorial is: Batched Graph Classification with DGL — DGL 0.2 documentation.

How message passing is used during training is what decides whether the setting is inductive or transductive.

Thanks for your reply. But I want to figure out whether GCN can perform inductive learning on a whole-graph classification task. I noticed that in the tutorial, graphs of different shapes were trained in the same batch: Batched Graph Classification with DGL — DGL 0.2 documentation

I am really confused about how to make GCN do inductive learning. I heard someone say before that the “message passing” version of GCN can do inductive learning. Also, the GCN defined in the tutorial does not use the adjacency matrix explicitly.

Yes

After you train on a set of graphs from the training set, use the test set, which hasn’t been used in training, to get the predictions.

That’s right. My task is graph-level classification, not just node-level or edge-level classification.

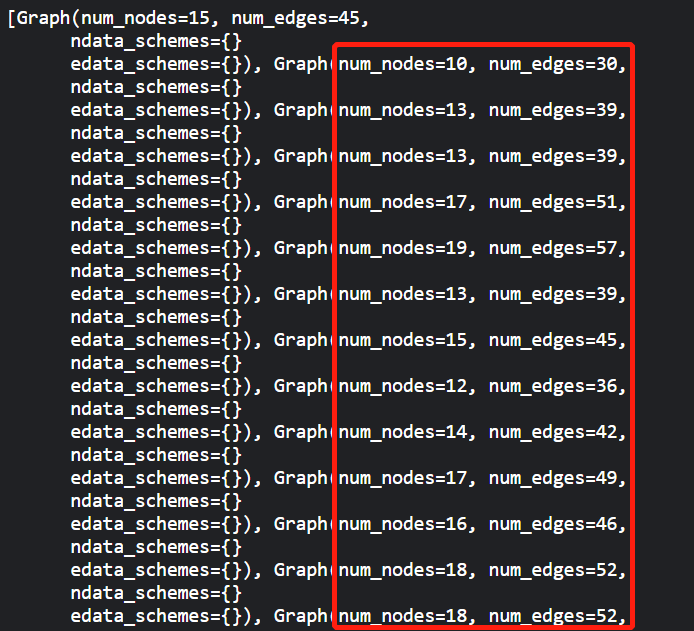

And the graphs in the training and testing datasets are not the same. They differ in shape, i.e., in the number of nodes and edges, like the MiniGCDataset in the tutorial above. In addition, I also take each node’s own features into consideration.

Therefore, my setting does not fit the original GCN setting of a single, fixed graph. But I find that the weight matrix in the GCN formulation does not depend on the number of nodes: its shape is (node feature dimension, output dimension). So I am confused about why GCN is said to fail at inductive learning. One explanation I heard is that the Laplacian matrix is what matters, but I am not very familiar with the theory. Still, I think GCN is only slightly different from GraphSAGE with the mean aggregation function.
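A minimal NumPy sketch of the weight-shape point, assuming the standard GCN propagation rule with self-loops added (A + I) and no nonlinearity: the same weight matrix `w` of shape (in_dim, out_dim) is applied to two random graphs with different numbers of nodes. The helper names `gcn_layer` and `random_graph` are hypothetical, not DGL APIs.

```python
import numpy as np

def gcn_layer(adj, h, w):
    """One GCN layer (no nonlinearity): D^-1/2 (A + I) D^-1/2 H W.

    adj: (N, N) symmetric 0/1 adjacency matrix; N may differ per graph.
    h:   (N, in_dim) node features.
    w:   (in_dim, out_dim) weights, shared by every graph.
    """
    adj_hat = adj + np.eye(adj.shape[0])  # add self-loops so no degree is 0
    deg = adj_hat.sum(axis=1)
    inv_sqrt = 1.0 / np.sqrt(deg)
    norm_adj = inv_sqrt[:, None] * adj_hat * inv_sqrt[None, :]
    return norm_adj @ h @ w

def random_graph(n, p, rng):
    """Hypothetical helper: random undirected graph as a dense adjacency matrix."""
    upper = np.triu(rng.random((n, n)) < p, k=1)
    return (upper | upper.T).astype(float)

rng = np.random.default_rng(0)
in_dim, out_dim = 4, 2
w = rng.normal(size=(in_dim, out_dim))  # one weight matrix for all graphs

for n in (5, 12):  # two graphs with different numbers of nodes
    adj = random_graph(n, 0.4, rng)
    h = rng.normal(size=(n, in_dim))
    print(n, gcn_layer(adj, h, w).shape)
# 5 (5, 2)
# 12 (12, 2)
```

Only the adjacency matrix and the feature matrix change size from graph to graph; the learned parameters do not, so nothing in the layer itself ties it to one particular graph.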

Thanks for your reply again. I am new to GNNs, so I am quite confused about this.

- In most cases, matrix multiplication and message passing are just two ways to express the same computation.

- GCN can be used for inductive learning, and as you said, it’s only slightly different from GraphSAGE. In practice, choose between them based on experimental performance.
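To illustrate the first point, here is a small NumPy sketch (toy graph, hypothetical helper names) that computes one GCN layer without a nonlinearity in two ways: once as a dense matrix product D^(-1/2) A D^(-1/2) H W, and once as explicit per-edge messages scaled by 1/sqrt(d_dst * d_src) and summed at each destination node. Self-loops are included in the edge list so that every degree is at least 1.

```python
import numpy as np

def gcn_matmul(adj, h, w):
    """Matrix form: D^-1/2 A D^-1/2 H W, with adj[dst, src] = 1 for each edge."""
    norm = 1.0 / np.sqrt(adj.sum(axis=1))
    return (norm[:, None] * adj * norm[None, :]) @ h @ w

def gcn_message_passing(edges, num_nodes, h, w):
    """Message-passing form: each edge (src, dst) sends a scaled message to dst."""
    deg = np.zeros(num_nodes)
    for _, dst in edges:
        deg[dst] += 1
    out = np.zeros((num_nodes, w.shape[1]))
    for src, dst in edges:
        out[dst] += (h[src] @ w) / np.sqrt(deg[dst] * deg[src])
    return out

# Undirected toy graph: store both directions, plus a self-loop on every node.
undirected = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
num_nodes = 4
edges = undirected + [(v, u) for u, v in undirected]
edges += [(i, i) for i in range(num_nodes)]

adj = np.zeros((num_nodes, num_nodes))
for src, dst in edges:
    adj[dst, src] = 1.0

rng = np.random.default_rng(0)
h = rng.normal(size=(num_nodes, 3))
w = rng.normal(size=(3, 2))

print(np.allclose(gcn_matmul(adj, h, w), gcn_message_passing(edges, num_nodes, h, w)))  # True
```

The dense version needs the whole N x N matrix in memory, while the message-passing version only touches existing edges, which is one practical reason graph libraries favor the latter.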

Thanks for your reply. So DGL implements the GraphConv layer in the “message passing” form? Does that make it inductive? If not, how can I implement an inductive GCN model using DGL for my graph classification task?

I found another discussion in the forum related to “message passing”: Are there message-passing layers that can’t be used in graphs different than the ones they were trained in? - Models & Apps - Deep Graph Library (dgl.ai).

In transductive learning, you train and evaluate a model on the same graph(s). In inductive learning, you train a model on one set of graph(s) and evaluate it on another set of graph(s). It’s mostly not about the model you choose but about how you set up the experiment/datasets.
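As a rough sketch of what setting up the experiment means for graph classification, assuming the dataset is simply a Python list of graphs (represented here by hypothetical placeholder tuples rather than real graph objects): an inductive split assigns each whole graph to either the training set or the test set, so no test graph is seen during training. In transductive node classification, by contrast, there is one graph and only the node labels are split (for example with train/test masks), while message passing still runs over the whole graph.

```python
import random

def split_graphs(graphs, test_fraction=0.2, seed=0):
    """Inductive, graph-level split: each graph lands entirely in train or test."""
    indices = list(range(len(graphs)))
    random.Random(seed).shuffle(indices)
    n_test = int(len(graphs) * test_fraction)
    test_idx = set(indices[:n_test])
    train = [g for i, g in enumerate(graphs) if i not in test_idx]
    test = [g for i, g in enumerate(graphs) if i in test_idx]
    return train, test

# Hypothetical toy dataset: each "graph" is (graph_id, num_nodes).
graphs = [(f"g{i}", 5 + i % 7) for i in range(10)]
train, test = split_graphs(graphs)
print(len(train), len(test))  # 8 2
```

Any model, GCN included, that is trained on `train` and evaluated on `test` is being evaluated inductively.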

You can use DGL’s GraphConv for both transductive and inductive learning.

So it only depends on the data in the training and testing sets? If the testing set contains unseen nodes, it counts as inductive learning no matter which model is used?

I understand the difference between inductive and transductive learning. But I am confused about why GCN fails at inductive learning compared with GraphSAGE, and how to modify it in DGL to do inductive learning. The GraphSAGE paper states that GCN is transductive. According to other articles I read, is this because GCN performs spectral convolution?

I found a discussion in the forum where you said graph-level classification is naturally an inductive learning task. Does that mean that if I apply GCN to this task, GCN is also doing inductive learning? Transductive learning for GCN - Questions - Deep Graph Library (dgl.ai)

Thanks for your reply again.

> So it only depends on the data in the training and testing sets? If the testing set contains unseen nodes, it counts as inductive learning no matter which model is used?

Yes.

> But I am confused about why GCN fails at inductive learning compared with GraphSAGE, and how to modify it in DGL to do inductive learning. The GraphSAGE paper states that GCN is transductive. According to other articles I read, is this because GCN performs spectral convolution?

I don’t agree with that statement in the GraphSAGE paper. While GCN was partially motivated by spectral graph convolution based on Laplacian matrices, its final form can be derived purely from the message-passing perspective. Formally, all a single GCN layer does is $h_i^{(l+1)}=\sum_{j\in\mathcal{N}(i)}\frac{1}{\sqrt{d_i d_j}}W^{(l+1)}h_{j}^{(l)}$.
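Stacking this per-node rule over all nodes gives the equivalent matrix form. Writing $H^{(l)}$ for the matrix whose $i$-th row is $(h_i^{(l)})^\top$, $A$ for the adjacency matrix with $A_{ij}=1$ iff $j\in\mathcal{N}(i)$, and $D$ for the diagonal degree matrix with $D_{ii}=d_i$:

$$
h_i^{(l+1)} = \sum_{j\in\mathcal{N}(i)} \frac{1}{\sqrt{d_i d_j}}\, W^{(l+1)} h_j^{(l)}
\;\Longleftrightarrow\;
H^{(l+1)} = D^{-1/2} A D^{-1/2} H^{(l)} \big(W^{(l+1)}\big)^{\top}
$$

since the $(i,j)$ entry of $D^{-1/2} A D^{-1/2}$ is $A_{ij}/\sqrt{d_i d_j}$ and $(h_j^{(l)})^\top (W^{(l+1)})^\top = (W^{(l+1)} h_j^{(l)})^\top$. The only learned parameter is $W^{(l+1)}$, whose shape depends on the feature dimensions alone; $A$ and $D$ are read off whichever graph is fed in. (In the original GCN, $\mathcal{N}(i)$ also contains $i$ itself via added self-loops.)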

I’ve used GCN for graph classification and it worked fine.

Thanks for your clarification. There is one last thing that I want to confirm.

I want to know whether I can simply replace the dataset in your tutorial Batched Graph Classification with DGL — DGL 0.2 documentation with mine to do inductive graph-level classification.

I am concerned about this because the dataset in this tutorial, MiniGCDataset, contains only structural information and no node features of its own. Therefore, I guess the position of the nodes doesn’t matter there.

But my dataset additionally contains node features that indicate each node’s type. So I am not sure whether nodes of the same type need to be in the same position, i.e., aligned, across different graphs. The original matrix-multiplication form requires the adjacency matrix as input. In that setting, I think nodes of the same type should occupy the same position in the adjacency matrix, i.e., be aligned, even though the matrices represent different graphs.

But in DGL, I don’t find any explicit use of the adjacency matrix; instead there is “message passing”, as described in the design of your framework. So I think my concern about position could be addressed with DGL.
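On the position question, one property may be relevant: a GCN layer is permutation-equivariant, so with a mean readout on top, renumbering the nodes of a graph (permuting its adjacency matrix and its feature rows consistently) leaves the graph embedding unchanged, because each node's type feature travels with the node. A minimal NumPy sketch under these assumptions (dense adjacency, self-loops, ReLU, mean pooling, one-hot node types; all helper names hypothetical):

```python
import numpy as np

def gcn_layer(adj, h, w):
    """GCN layer with self-loops and ReLU: relu(D^-1/2 (A + I) D^-1/2 H W)."""
    adj_hat = adj + np.eye(adj.shape[0])
    inv_sqrt = 1.0 / np.sqrt(adj_hat.sum(axis=1))
    return np.maximum((inv_sqrt[:, None] * adj_hat * inv_sqrt[None, :]) @ h @ w, 0.0)

def graph_embedding(adj, h, w):
    """Mean readout over nodes: one vector per graph."""
    return gcn_layer(adj, h, w).mean(axis=0)

rng = np.random.default_rng(0)
n, num_types, out_dim = 6, 3, 4
upper = np.triu(rng.random((n, n)) < 0.5, k=1)
adj = (upper | upper.T).astype(float)
node_types = rng.integers(0, num_types, size=n)
h = np.eye(num_types)[node_types]  # one-hot node-type features
w = rng.normal(size=(num_types, out_dim))

perm = rng.permutation(n)           # renumber the nodes
adj_perm = adj[np.ix_(perm, perm)]  # permute rows and columns together
h_perm = h[perm]                    # features move with their nodes

print(np.allclose(graph_embedding(adj, h, w), graph_embedding(adj_perm, h_perm, w)))  # True
```

Under these assumptions, nodes of the same type do not need to sit in the same row across graphs, whether the layer is written as a matrix product or as message passing.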

Thanks for your help again. This has really been bothering me, but it is important.

- This is a very outdated tutorial for DGL 0.2.x, while the latest version is 0.8.x. I recommend following this one instead.

- As you said, MiniGCDataset does not have pre-defined input node features. As a result, the tutorial used the node degrees as the input node features.
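For reference, a minimal sketch of using node degrees as input features, written in NumPy over a plain (src, dst) edge list so it runs without DGL; in DGL itself, the in-degrees of a graph `g` are available from `g.in_degrees()`. The one-hot node-type columns concatenated at the end are a hypothetical illustration of how a dataset that has its own node features might combine both.

```python
import numpy as np

def degree_features(edges, num_nodes):
    """Return each node's in-degree as an (N, 1) float32 feature column."""
    deg = np.zeros((num_nodes, 1), dtype=np.float32)
    for _, dst in edges:  # each edge is (src, dst)
        deg[dst, 0] += 1
    return deg

# Toy graph: 0 <-> 1, 1 <-> 2, and a one-way edge 0 -> 2.
edges = [(0, 1), (1, 0), (1, 2), (2, 1), (0, 2)]
deg = degree_features(edges, num_nodes=3)
print(deg.ravel())  # [1. 2. 2.]

# Hypothetical: node types 0, 2, 1 (out of 3 types) as one-hot columns.
node_types = np.array([0, 2, 1])
type_onehot = np.eye(3, dtype=np.float32)[node_types]
feats = np.concatenate([deg, type_onehot], axis=1)  # shape (3, 4)
```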

- What did you mean by “alignment” and “position”?

Thanks for the tutorial. If I want to do graph-level regression, could I set num_classes in the model to 1?

My concern is that setting num_class=1, i.e., reducing each graph to a single final feature dimension, might cause information loss. The downstream task is a regression problem, and the graph embedding serves as its input.

Additionally, the exact number of graph classes is not known. The graphs appear to belong to many different classes.

> Thanks for the tutorial. If I want to do graph-level regression, could I set num_classes in the model to 1?

Yes, you can. You will also need to change the loss function and evaluation metric.
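A minimal sketch of that loss and metric change in NumPy, assuming the model now outputs one value per graph with shape (batch, 1). In a PyTorch training loop, the corresponding change would be swapping `torch.nn.CrossEntropyLoss` for `torch.nn.MSELoss` (or `torch.nn.L1Loss`) and replacing accuracy with an error metric such as MAE or RMSE. The numbers below are made-up toy values.

```python
import numpy as np

def mse_loss(pred, target):
    """Mean squared error: a typical training loss for regression."""
    return float(np.mean((pred - target) ** 2))

def mae(pred, target):
    """Mean absolute error: an evaluation metric to use instead of accuracy."""
    return float(np.mean(np.abs(pred - target)))

# Toy predictions from a model with a single output: one value per graph, shape (batch, 1).
pred = np.array([[2.5], [0.0], [2.0]])
# Keep targets shaped (batch, 1) too: (3, 1) - (3,) would silently broadcast to (3, 3).
target = np.array([[3.0], [-0.5], [2.0]])

print(mse_loss(pred, target))  # ≈ 0.1667
print(mae(pred, target))       # ≈ 0.3333
```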

> My concern is that setting num_class=1, i.e., reducing each graph to a single final feature dimension, might cause information loss. The downstream task is a regression problem, and the graph embedding serves as its input.

The final model output should have the same size as the label. Meanwhile, you can have larger hidden size to prevent information bottleneck.
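A shape-only NumPy sketch of that advice, with hypothetical sizes: node embeddings can be as wide as you like (256 here), a mean readout keeps that width as the graph embedding, and only the final linear layer maps down to the label size (1 for a single regression target). Random values stand in for trained weights and real GNN outputs.

```python
import numpy as np

rng = np.random.default_rng(0)
hidden_dim = 256  # hypothetical: wide hidden size, so no bottleneck inside the GNN
label_dim = 1     # one regression target per graph

node_h = rng.normal(size=(9, hidden_dim))  # stand-in for node embeddings of a 9-node graph
graph_emb = node_h.mean(axis=0)            # mean readout -> (hidden_dim,)

w_out = rng.normal(size=(hidden_dim, label_dim)) * 0.01  # final linear layer
b_out = np.zeros(label_dim)
pred = graph_emb @ w_out + b_out           # -> (label_dim,)

print(graph_emb.shape, pred.shape)  # (256,) (1,)
```

If a separate downstream model consumes the graph embedding, the wider `graph_emb` vector, rather than the one-dimensional prediction, is the natural thing to pass on; this is an assumption about your pipeline.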

> Additionally, the exact number of graph classes is not known. The graphs appear to belong to many different classes.

I don’t understand this.

What I wanted to express in the last sentence is:

(1) the graph embedding is learned for the downstream regression problem;

(2) I don’t know how many classes exist among the graphs, but there seem to be many, i.e., a large value of “num_class”;

(3) could I set “num_class” larger to prevent information loss?

(4) or should I only set the hidden dimension larger, as you recommended?

Then how do you plan to train the model if the number of classes/tasks also differs?
