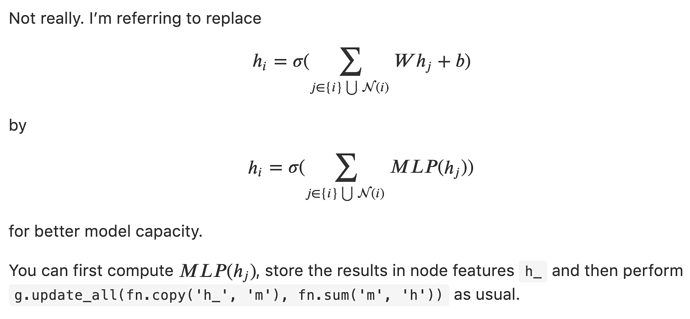

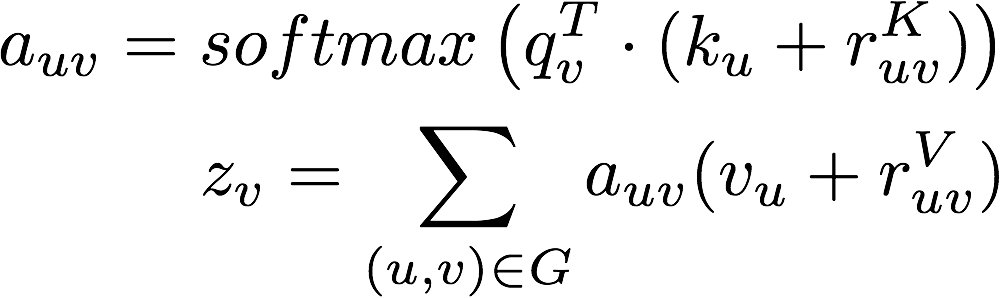

Yes, exactly. But how do I set up a GAT (Graph Attention Network) to handle multiple node and edge features? Please refer to the blog post I started, "Multiple Node & Edge features", where I got some answers, but not exactly what I was looking for.
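A minimal sketch of one way to do this, assuming each node carries an F-dimensional feature vector and each edge a D-dimensional one. It is a single-head GAT layer in plain NumPy whose attention score also sees the edge features: e_ij = LeakyReLU(aᵀ [W x_i ‖ W x_j ‖ W_e e_ij]). The weights `W`, `W_e`, `a` and the function name are hypothetical, and this is an illustration rather than a reference implementation. For comparison, PyTorch Geometric's `GATConv` takes an `edge_dim` argument and an `edge_attr` input in `forward` for a similar purpose.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def gat_layer_with_edge_features(X, edge_index, E, W, W_e, a, slope=0.2):
    """Hypothetical single-head GAT layer whose attention also uses edge features.

    X          : (N, F) node features, one row per node
    edge_index : (2, M) edge_index[0] = source j, edge_index[1] = target i
    E          : (M, D) edge features, one row per edge
    W, W_e     : (F, H), (D, H) projections for node and edge features
    a          : (3*H,) attention vector over [W x_i || W x_j || W_e e_ij]
    """
    src, dst = edge_index
    h = X @ W        # projected node features, (N, H)
    h_e = E @ W_e    # projected edge features, (M, H)

    # Unnormalised attention score for every edge j -> i.
    z = np.concatenate([h[dst], h[src], h_e], axis=1)   # (M, 3H)
    scores = leaky_relu(z @ a, slope)                   # (M,)

    # Softmax over the incoming edges of each target node (numerically stable).
    n = X.shape[0]
    max_in = np.full(n, -np.inf)
    np.maximum.at(max_in, dst, scores)
    exp_s = np.exp(scores - max_in[dst])
    denom = np.zeros(n)
    np.add.at(denom, dst, exp_s)
    alpha = exp_s / denom[dst]                          # (M,)

    # Attention-weighted sum of neighbour messages.
    out = np.zeros_like(h)
    np.add.at(out, dst, alpha[:, None] * h[src])
    return out, alpha

# Toy usage: 4 nodes with 5 features each, edges with 3 features each.
rng = np.random.default_rng(0)
num_nodes, F, D, H = 4, 5, 3, 8
X = rng.normal(size=(num_nodes, F))
# A ring 0->1->2->3->0 plus self-loops, as in the original GAT formulation.
edge_index = np.array([[0, 1, 2, 3, 0, 1, 2, 3],
                       [1, 2, 3, 0, 0, 1, 2, 3]])
E = rng.normal(size=(edge_index.shape[1], D))
W = rng.normal(size=(F, H)) * 0.1
W_e = rng.normal(size=(D, H)) * 0.1
a = rng.normal(size=3 * H) * 0.1

out, alpha = gat_layer_with_edge_features(X, edge_index, E, W, W_e, a)
print(out.shape)  # (4, 8)
```

Here the edge features only change *how much* each neighbour is attended to; the message itself is still the projected neighbour vector. For multi-head attention, one would run K independent (`W`, `W_e`, `a`) sets and concatenate or average the K outputs.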

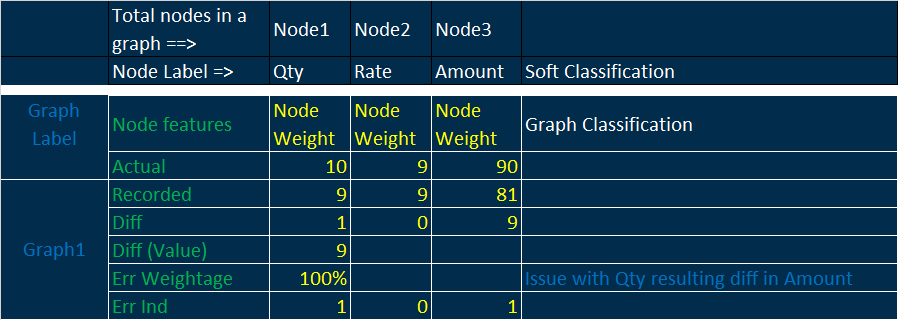

We are designing a graph representation learning model to spot patterns for detecting fraudulent transactions and for defect detection/identification in the finance domain. The patterns are detected/identified through connected nodes and edges that represent various critical parameters/indicators. Each node has many features with corresponding values, and the node and edge labels are also factors in the representation learning.
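One common way to let node/edge labels take part in the learning is to encode them as features: one-hot encode each categorical label and concatenate it with the (standardised) numeric indicators, giving one node feature matrix `X` and one edge feature matrix `E`. The sketch below assumes that approach; the label vocabularies (`account`, `merchant`, `payment`, ...) and all values are hypothetical placeholders, not data from this project.

```python
import numpy as np

def one_hot(labels, vocabulary):
    """Map each categorical label to a one-hot row; vocabulary fixes the column order."""
    index = {name: k for k, name in enumerate(vocabulary)}
    out = np.zeros((len(labels), len(vocabulary)))
    for row, name in enumerate(labels):
        out[row, index[name]] = 1.0
    return out

def standardize(values):
    """Z-score each numeric column so large-magnitude features (e.g. amounts) do not dominate."""
    values = np.asarray(values, dtype=float)
    mean = values.mean(axis=0)
    std = values.std(axis=0)
    std[std == 0] = 1.0  # leave constant columns at 0 instead of dividing by zero
    return (values - mean) / std

# Hypothetical toy data: 3 nodes, each with 2 numeric indicators and a type label.
node_numeric = [[1200.0, 3.0], [15.0, 40.0], [980.0, 5.0]]  # e.g. balance, txn count
node_labels = ["account", "merchant", "account"]
NODE_TYPES = ["account", "merchant", "device"]

# Hypothetical edges 0->1 and 2->1, each with one numeric feature and a label.
edge_index = np.array([[0, 2], [1, 1]])  # row 0 = source, row 1 = target
edge_numeric = [[250.0], [4000.0]]       # e.g. transfer amount
edge_labels = ["payment", "transfer"]
EDGE_TYPES = ["payment", "transfer"]

# Final inputs: numeric features followed by one-hot label columns.
X = np.hstack([standardize(node_numeric), one_hot(node_labels, NODE_TYPES)])
E = np.hstack([standardize(edge_numeric), one_hot(edge_labels, EDGE_TYPES)])
print(X.shape, E.shape)  # (3, 5) (2, 3)
```

`X` and `E` can then be fed to any GAT variant that accepts edge features. A learned embedding per label is an alternative to one-hot encoding when the number of label types is large.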